Author: Nikola Mrksic, PolyAI

Many of us are already interacting daily with AI-infused conversational assistants like Siri and Alexa, and businesses around the world are trying to figure out how to get in on the action. The opportunities for conversational AI in business are endless, but there’s a big problem – many companies have been burned before.

Until recently, commercial conversational ‘AI’ has focussed largely on pre-architected conversations. The chatbot is the perfect example of this. Some poor souls have been tasked with pre-empting every possible direction a conversation could take, and scripting all the potential ways customers could communicate.

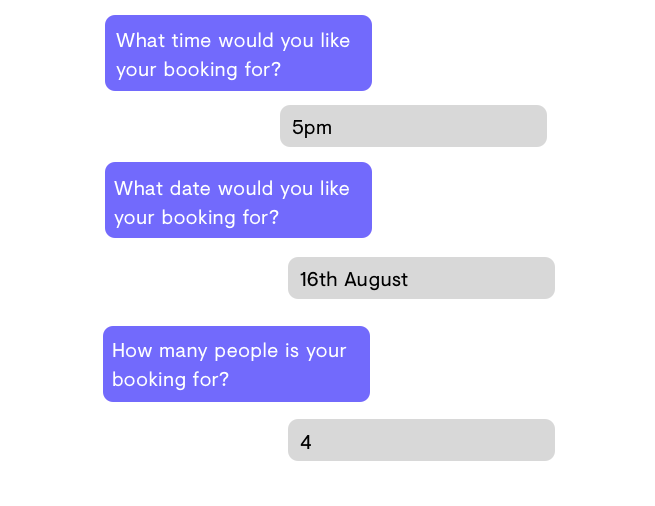

Take this example of a customer trying to book a table at a restaurant.

It’s a pretty simple conversation really. There’s only a couple of ways to say you want your booking for 5pm, right? You might say ‘five’, or ‘five in the evening’, or ‘five in the afternoon’, but it would be pretty quick and painless to list all of these options out.

But that’s not really how people speak. People like to ask questions, so they might ask for your opening hours before they decide what time they want to come in. People also like to go back on themselves – if there was no availability for the date you wanted, you might want to choose a different date, and book for a different number of people.

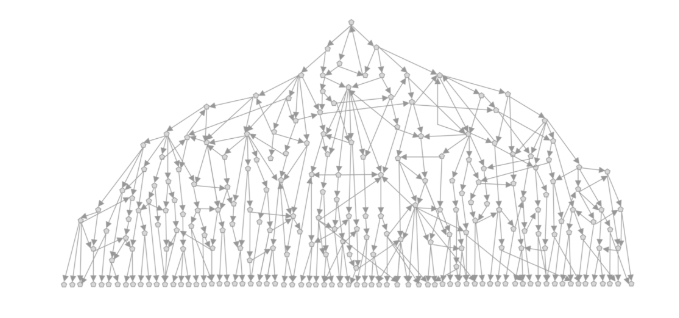

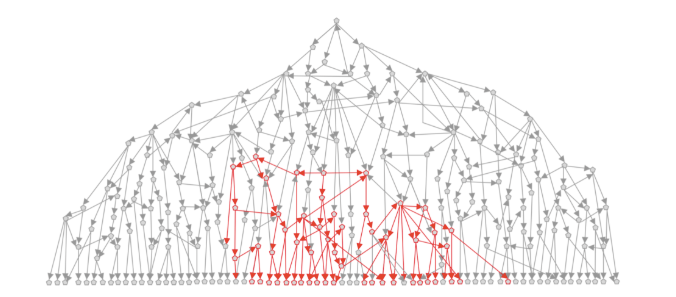

Pretty soon your simple conversational flow ends up looking like this:

Still, it’s not a problem, right? As long as it works, and if you’re paying engineers to get the job done, what do you care about how it’s done? And that’s a legitimate mindset. Good technology is evidenced by the outcomes, not the neatness of code that’s behind it.

But say you want to make a change. In this example, a restaurant might change their menu, or their opening hours, and the virtual agent is going to need to deal with that. And this is where the messy engineering work makes things really difficult.

Making one small change within the decision tree is going to have a huge knock-on effect on the rest of the architecture. So when you pay your engineers to update one thing, they end up having to update dozens, if not hundreds, of things, and you get stuck with the bill.
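To make the problem concrete, here is a minimal sketch of what a hand-scripted flow can look like in code. The state names, prompts and phrases are all made up for illustration; this is not any particular vendor's implementation.

```python
# Hypothetical hand-scripted booking flow: every state lists the exact
# phrases it accepts and the state each phrase leads to.
SCRIPT = {
    "ask_time": {
        "prompt": "What time would you like to book for?",
        "transitions": {
            "five": "ask_party_size",
            "five in the evening": "ask_party_size",
            "five in the afternoon": "ask_party_size",
        },
    },
    "ask_party_size": {
        "prompt": "How many people will be coming?",
        "transitions": {"two": "confirm", "four": "confirm"},
    },
    "confirm": {"prompt": "You're booked!", "transitions": {}},
}


def next_state(state, utterance):
    """Follow the script; anything not listed verbatim is a dead end."""
    key = utterance.strip().lower()
    return SCRIPT[state]["transitions"].get(key, "fallback")


print(next_state("ask_time", "Five in the evening"))
print(next_state("ask_time", "What are your opening hours?"))
```

The first call follows the script; the second falls through to the fallback, because nobody listed that question under `ask_time`. Supporting it properly means adding a transition to every state where a customer might ask it, which is exactly the kind of knock-on work described above.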

It’s not just restaurants that have these issues. Insurance companies make changes to their policies, subscription businesses make changes to their payment models, product businesses are constantly updating and improving their products. Building a virtual agent is costly enough in the first place, so why should businesses keep paying through the nose for constant updates?

This type of virtual agent is not based on AI. You can’t just give a ten-year-old kid a paper to read out loud and call them a neuroscientist. In the same way, you can’t just give a chatbot a script and call it artificial intelligence.

Most companies offering commercial conversational AI solutions today are using a combination of AI and pre-scripted conversational flows to build virtual agents. It’s not necessarily a bad thing, but this type of technology requires a huge amount of data to reach an acceptable level of accuracy, and in the early days (read: months, years), is going to rely heavily on pre-scripted conversations until it has the level of data it needs to be truly intelligent.

Off the shelf understanding

At PolyAI, however, we’ve trained our technology on billions of conversations. We’ve scoured forums, Q&As, FAQs and even film scripts to teach our Encoder model the basics of conversation.

What does this mean?

Well, typically, conversational AI companies need huge amounts of data from their clients in order to train AI agents. For contact centers, that’s thousands, if not millions, of conversational examples you’d need to supply to get a half-decent virtual agent. Not only would you need to provide these examples, you’d have to provide them in a useful format, accurately annotated and categorised so the AI agent learns to say the right thing.

Using a pre-trained model means that PolyAI only needs a small sample of past customer service calls, or even just FAQs and knowledge bases, to train AI agents in the knowledge that’s specific to our clients. Because the Encoder model has been exposed to so much conversational data, it already understands the variation in how people express themselves, and is able to draw out the intent behind a query, regardless of the way the query is phrased.

Intent and response

Our AI assistants work on two principles: intent and response.

Intent is all about natural language understanding, value extraction and intent classification. This means that whatever customers say, the AI agent can identify what outcome the customer is expecting and extract the relevant information (e.g. order number, name, address) from wherever it occurs naturally within the conversation.
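As a rough illustration of the two jobs on the intent side, the sketch below classifies an utterance by comparing it with a handful of labelled examples, then pulls out a time and a party size. Everything here is an assumption made for the example: the intent names, the example phrases, the word-overlap score and the regular expressions are simple stand-ins for learned models, not a description of PolyAI's technology.

```python
import re

# Hypothetical labelled examples, a couple per intent.
EXAMPLES = {
    "book_table": ["i want to book a table", "can i reserve a table for tonight"],
    "opening_hours": ["what time do you open", "when are you open"],
}


def tokens(text):
    """Lowercase the text and split it into a set of words."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def classify_intent(utterance):
    """Return the intent of the most similar example (Jaccard word overlap)."""
    words = tokens(utterance)
    best_intent, best_score = None, 0.0
    for intent, examples in EXAMPLES.items():
        for example in examples:
            example_words = tokens(example)
            score = len(words & example_words) / len(words | example_words)
            if score > best_score:
                best_intent, best_score = intent, score
    return best_intent


def extract_values(utterance):
    """Pull a time and a party size out of the utterance, wherever they occur."""
    text = utterance.lower()
    values = {}
    time_match = re.search(r"\b(\d{1,2})\s*(am|pm)\b", text)
    if time_match:
        values["time"] = time_match.group(1) + time_match.group(2)
    size_match = re.search(r"\b(\d+)\s+(?:people|guests)\b", text)
    if size_match:
        values["party_size"] = int(size_match.group(1))
    return values


utterance = "Could I book a table for 4 people at 5pm please?"
print(classify_intent(utterance), extract_values(utterance))
```

Word overlap and regular expressions break down quickly on real speech. The point of a pre-trained encoder is to replace both with representations that already capture how differently people phrase the same request.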

Response is all about getting the relevant answer or information the customer is seeking. Our conversational search engine scours whatever knowledge we have from the client – be it from knowledge bases, FAQs or previous chat/call logs – and ranks every possible response in terms of relevance. It can then pick out the best answer based on both the last thing the customer said, and the conversation as a whole. So if the customer mentioned a key piece of information earlier in the conversation, the AI agent remembers this and uses it when picking the right response.
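The ranking idea can be sketched in a few lines. This is a toy version under stated assumptions: `embed` is a bag-of-words stand-in for a learned encoder, and the `context_weight` parameter and the candidate responses are invented for the example. It scores each candidate against both the customer's last message and the conversation as a whole.

```python
import math
import re
from collections import Counter


def embed(text):
    """Toy stand-in for a learned encoder: a bag-of-words count vector."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a, b):
    """Cosine similarity between two count vectors (0.0 if either is empty)."""
    dot = sum(a[t] * b[t] for t in a)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def rank_responses(history, candidates, context_weight=0.3):
    """Rank candidates by similarity to the last turn and to the whole history."""
    last_turn = embed(history[-1])
    whole_conversation = embed(" ".join(history))
    scored = []
    for candidate in candidates:
        vector = embed(candidate)
        score = ((1 - context_weight) * cosine(last_turn, vector)
                 + context_weight * cosine(whole_conversation, vector))
        scored.append((score, candidate))
    # Highest score first.
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [candidate for _, candidate in scored]


history = ["I'd like to book for Friday", "Is that possible?"]
candidates = [
    "Yes, we have tables free on Saturday.",
    "Yes, we have tables free on Friday.",
]
print(rank_responses(history, candidates)[0])
```

In this example the last message ("Is that possible?") shares no words with either candidate, so only the earlier mention of Friday separates them. That is the role conversation history plays in picking the right response.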

This approach is much more scalable than pre-architected conversational agents. Training our technology on new information is as simple as uploading this new information to our model. We don’t need to pre-empt all the potential conversational twists and turns; we just give the model the new information and it disseminates it accordingly.

The future of AI in customer service

There’s no doubt that conversational AI is going to play a huge part in the future of customer service. The next five years will see humans and AI work together to enable companies to provide the kind of outstanding experiences that customers expect. To make the most of this new technology, leaders need to be educating themselves now so they don’t get caught out later. You can start getting to grips with the latest on conversational AI and customer service over on the PolyAI blog.

Nikola Mrksic from PolyAI spoke at the AI Assistant Summit in London and you can watch the video presentation here.