Author: Dr. Elona Erez, Sr. Algorithm Researcher, GSI Technology

Change detection is an important task in many fields. Informed decisions often depend on knowing how the environment has changed, whether in natural resources or in man-made structures. In agriculture, for example, change detection enables monitoring of deforestation, disaster assessment, monitoring of shifting cultivation, and detection of crop stress. In the civil field, it can aid city planning. Current approaches to change detection usually follow one of two methods: post-classification comparison or difference image analysis. Because satellite images typically have very high resolution, both methods require heavy computing resources and are very time consuming.

The first method, post-classification comparison, first classifies the contents of the different images of the same scene and then compares those contents in order to identify the differences. A high degree of accuracy is required in the classification stage, since errors in the classification of either image carry over into the comparison. In this work we follow an approach closer to the second method, difference image analysis. In this method, a difference image is constructed in order to highlight the differences between two images of the same scene. Further analysis of the difference image is then carried out in order to determine the sources of the changes. The quality of the difference image determines the final change detection results. Because the atmosphere may negatively affect the reflectance values of images taken from satellites, techniques such as radiometric correction are often applied when creating the difference image. The techniques most often used to construct the difference image are spectral differencing, ratioing, and texture ratioing.

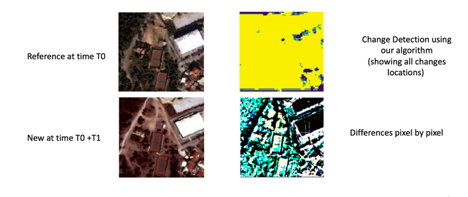

In this work, we adopt the method for constructing the difference image from [1]. In this approach, a convolutional neural network (CNN) is used to construct the difference image. Deep learning is a powerful tool for highlighting differences while avoiding the weaknesses of conventional approaches. The change between the images is detected and classified using semantic segmentation. This approach classifies the nature of the change automatically, in an unsupervised manner, and the model is resistant to noise in the image. Visual representations of the detected changes are built using UNET, a CNN designed specifically for semantic segmentation. That is, each pixel in the difference image is classified according to the object it is part of.

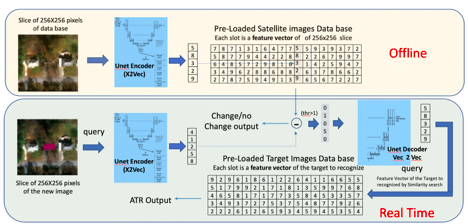

Building upon the approach from [1], which uses UNET for semantic segmentation of the difference image, we propose in this work to add similarity search in the following way. The algorithm has two stages. The first stage is carried out offline using the first (reference) satellite image. The second stage is carried out in real time, upon receiving the new, updated satellite image of the same scene.

In the first stage, we start by dividing the entire area of the high-resolution reference satellite image into smaller blocks of size 256x256 pixels. Each block is fed as input into the encoder part of UNET, which generates a feature vector for that 256x256 sub-area. For example, at a resolution of 1 m per pixel, each sub-area represents an area of 256 m x 256 m. All the feature vectors are loaded into Associative Memory, forming the database of the reference image. Similarly, a second database is constructed offline for the targets of ATR (automatic target recognition). This database will be used to classify the objects in the difference image. The feature vectors of the ATR targets, also taken from the output of the UNET encoder, are loaded into this second database. For example, if the task is to classify different vehicle types, the database may include feature vectors of an SUV, van, sedan, bus, taxi, etc.
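The block division described above can be sketched as a small helper that lists the top-left corner of every 256x256 block. This is only an illustrative sketch: the function name `block_origins` is hypothetical, and skipping partial blocks at the image edges is an assumption, since the text does not say how edges are handled.

```python
def block_origins(height, width, block=256):
    """Return (row, col) top-left corners of non-overlapping block x block tiles.

    Assumption: partial tiles at the right and bottom edges are skipped;
    the article does not specify how image edges are treated.
    """
    return [
        (row, col)
        for row in range(0, height - block + 1, block)
        for col in range(0, width - block + 1, block)
    ]


# A 1024 x 768 pixel image yields 4 rows x 3 columns of blocks.
origins = block_origins(1024, 768)
print(len(origins))  # 12
```

Each returned corner identifies one block that would be passed to the UNET encoder, and the resulting feature vector would be stored in the reference database under that position.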

These feature vectors are generated by feeding into UNET a 256x256 block of satellite image containing the particular object we wish to classify. In our example, there would be one or more images of a sedan, an SUV, a van, etc. The classification accuracy is expected to improve as more feature vectors are added to the database for each object type we want to classify. But even with just one example per object, the system is still expected to work, in a zero-shot manner. This is unlike conventional deep learning approaches, which require many examples of each class.
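Classification against a database with as little as one example per class can be sketched as a nearest-neighbor lookup. The sketch below uses cosine similarity over toy three-dimensional vectors; the names `cosine_similarity`, `classify`, and `atr_db`, as well as the vector values, are made up for illustration and are not encoder outputs.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def classify(query, labeled_vectors):
    """Return the label of the stored vector most similar to the query."""
    best_label, _ = max(
        ((label, cosine_similarity(query, vec)) for label, vec in labeled_vectors),
        key=lambda pair: pair[1],
    )
    return best_label


# Hypothetical ATR database with a single example per vehicle type.
atr_db = [
    ("sedan", [0.9, 0.1, 0.0]),
    ("bus", [0.1, 0.9, 0.2]),
    ("van", [0.3, 0.3, 0.9]),
]
print(classify([0.8, 0.2, 0.1], atr_db))  # sedan
```

Adding more labeled vectors per class only extends the list; the lookup itself does not change, which is why no retraining is needed when a new object type is added.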

In the second, real-time stage, the UNET encoder is run on the 256x256 slices of the second image arriving from the satellite. The output of the UNET encoder is the second (new) feature vector, which serves as the query for similarity search. In order to find the related slice in the reference image, the database of the reference image is searched for the nearest feature vector, using either cosine similarity or L2 distance. The slice found with the minimum distance is assumed to be the related slice in the reference image.

The chosen feature vector is then subtracted from the second feature vector, and the difference feature vector of the difference image is computed as follows. For each feature, if the absolute difference between the chosen feature vector and the second feature vector is below a certain threshold, that feature is set to zero in the difference feature vector. If the absolute difference is above the threshold, that feature is set to its value in the new (second) vector. The difference vector is fed as input to the UNET decoder. At the output of the decoder, the semantic segmentation classifies each pixel as yes or no: yes if the pixel belongs to a changed object in the new image, and no if it belongs to the same object as in the reference image. For each new object, the feature vector of the pixel is used as a query against the second database, the one holding the ATR targets. Similarity search over this second database is used to classify the target.
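The reference lookup and the thresholding rule can be sketched in a few lines. This sketch uses L2 distance and toy three-dimensional vectors; the function names, the threshold value of 0.2, and the choice to zero a feature whose difference exactly equals the threshold are all assumptions made for illustration.

```python
import math


def nearest_l2(query, database):
    """Return the index of the database vector closest to the query in L2 distance."""
    return min(range(len(database)), key=lambda i: math.dist(query, database[i]))


def difference_vector(reference, new, threshold):
    """Keep the new feature where it differs from the reference by more than
    the threshold; otherwise set it to zero.

    Assumption: a difference exactly equal to the threshold is zeroed.
    """
    return [n if abs(n - r) > threshold else 0.0 for r, n in zip(reference, new)]


# Hypothetical reference database and new (query) feature vector.
reference_db = [[0.1, 0.9, 0.3], [0.9, 0.4, 0.7]]
new_vec = [0.85, 0.45, 0.1]

idx = nearest_l2(new_vec, reference_db)
diff = difference_vector(reference_db[idx], new_vec, threshold=0.2)
print(idx, diff)  # 1 [0.0, 0.0, 0.1]
```

Only the third feature changed by more than the threshold, so it alone survives in the difference vector that would be passed to the decoder.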

[1] Jong, K.L.D.; Bosman, A.S. Unsupervised Change Detection in Satellite Images Using Convolutional Neural Networks. Available online: https://arxiv.org/abs/1812.05815?context=cs.NE (accessed on 22 February 2019).