Author Bio: Abhishek Gupta, Founder of Montreal AI Ethics Institute and Machine Learning Engineer at Microsoft

2019 was the year the world at large woke up to the importance of responsible AI [1] as the use of AI proliferated across a variety of domains. An often-cited example is how biases can creep into the processing of loan and credit applications [2] and disproportionately harm already marginalized populations. What has escaped further analysis and discussion, however, are the many other places where interactions with AI-based systems in the world of finance might cause harm. Additionally, and perhaps more importantly, there has been a lack of concrete actions that can be taken to mitigate these harms. There is a plethora of guidelines and sets of principles [3], yet they are rarely actionable and often embody a narrow, technically focused approach that doesn't do justice to the multidimensional nature of the problems. Even when some of them take an interdisciplinary stance, they are not concrete enough to actually be put into practice.

Let's start by looking at a few other cases where financial services and products might have negative outcomes, intended or unintended, because of AI-enabled automation.

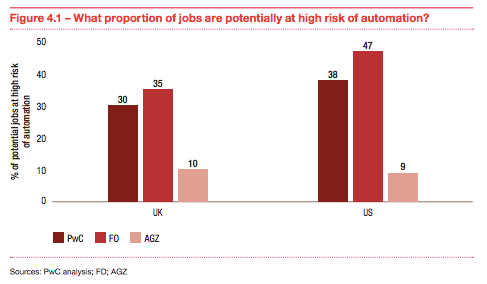

Automation has consequences for labor, and a number of reports cite statistics such as “32% of the financial sector jobs in the UK are at high risk of automation” [4] and “Bridgewater looking to cut jobs in view of automation” [5]. As AI-enabled automation becomes more capable, there is no doubt that it offers a way to boost the bottom line while eking out the last ounce of productivity gains. It also gives employees the opportunity to focus on the parts of their jobs that are more meaningful to them, offer learning and growth, and provide a greater sense of purpose. But it comes with an inevitable caveat: fewer people will be required in those roles, so some job losses might be unavoidable.

From the employer's perspective, what's important when adopting automation is to create complementary retraining and upskilling programs that help employees transition effectively as automation becomes more widely used. One approach that might be fruitful is to run a company-wide survey asking employees which parts of their jobs they think could be automated, and then to strengthen their skills in other areas so they can embrace automation rather than fight it.

Customer service automation, increasingly adopted as emerging fintech firms challenge incumbents with online offerings, has had its fair share of problems. For example, voice recognition systems used to replace traditional IVR telephone systems struggle to recognize accents other than those of native English speakers from the West. Accuracy suffers even more for languages other than English because of a lack of sufficient training data, further entrenching the gap in the quality of service offered to clients from different backgrounds, who are often minorities.

Some banks have chosen to automate their FAQ sections via chatbots in an attempt to reach younger demographics where they already spend time online, through interfaces they are familiar with, namely messaging services and social media platforms. While these are laudable efforts, they again suffer from the consequences of ineffective automation, promising more than they can deliver. Specifically, they often struggle to understand language use that departs from the standard set by native speakers, primarily because that standard is the dominant pattern captured in the AI models via the training data.

In cases where older channels are fully replaced by these modern interfaces, those who are newly onboarding to financial services through digital channels face a significant barrier. So what can be done to address some of these issues?

For starters, more representative teams are better at spotting gaps in the training data that might not be obvious to a homogeneous team. This means diversity not as a box-checking exercise, but diversity that truly reflects the potential market for the product or service, along with a genuine effort to incorporate feedback from team members who have a deeper, contextual understanding of the minority communities the system might serve. A variety of technical tools now help analyze potential biases in datasets [6] [7], but these need to be paired with domain expertise, often from social scientists, who bring the depth and context that, combined with technical tools, offers the best chance of mitigating these issues. One way to approach this is through systems thinking [8], which captures the fact that technology is deployed within a larger social and political ecosystem.
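To make the idea of a dataset bias check a little more concrete, here is a minimal Python sketch of one simple measure: comparing approval rates across groups in a set of loan decisions and taking the ratio of the lowest rate to the highest. It does not use or reproduce the APIs of the tools cited in [6] and [7]; the function names, group labels and toy data are hypothetical, and the 0.8 cut-off is the "four-fifths" heuristic from US employment-selection guidelines, used here only as an illustrative threshold.

```python
from collections import defaultdict


def selection_rates(records):
    """Return the approval rate for each group from (group, approved) pairs."""
    totals = defaultdict(int)
    approvals = defaultdict(int)
    for group, approved in records:
        totals[group] += 1
        approvals[group] += int(approved)
    return {group: approvals[group] / totals[group] for group in totals}


def disparate_impact_ratio(rates):
    """Ratio of the lowest group rate to the highest; 1.0 means equal rates."""
    highest = max(rates.values())
    lowest = min(rates.values())
    # If no group is ever approved, every rate is zero and the groups are equal.
    return lowest / highest if highest > 0 else 1.0


# Hypothetical toy decisions: (applicant group, was the application approved?)
decisions = [
    ("group_a", True), ("group_a", True), ("group_a", True), ("group_a", False),
    ("group_b", True), ("group_b", False), ("group_b", False), ("group_b", False),
]

rates = selection_rates(decisions)
ratio = disparate_impact_ratio(rates)
print(rates)            # {'group_a': 0.75, 'group_b': 0.25}
print(round(ratio, 2))  # 0.33

# Illustrative threshold only (the "four-fifths" heuristic); a flag is a prompt
# for human review, not a verdict on whether the system is unfair.
print("flag for review" if ratio < 0.8 else "no flag")
```

A number like this is only a starting point: deciding which groups to compare, whether the underlying data is representative, and what a gap actually means for the people affected is exactly where the domain and social science expertise described above comes in.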

Additionally, systems thinking forces one to reason about stakeholders who are otherwise invisible in the design and development of these systems. At the Montreal AI Ethics Institute (MAIEI) [9], we've been practicing and advocating for a participatory design process in building ethical, safe and inclusive AI systems. Corporations can adopt a similar approach in their product development life cycle by using consultative, open focus groups. Another aspect of the work at MAIEI has been raising education and awareness of AI ethics, which is still not widely taught as part of technical programs at higher education institutions. While changing that requires recognition from those institutions, corporations can use publicly available resources and training programs to build up ethics competence among their employees, which would translate into products and services that serve their customers more fully.

AI-enabled automation presents a remarkable opportunity to create novel solutions in the finance industry and bring gains both to those employed by the industry and to those who interact with it. Yet that opportunity comes with a set of challenges on the other side of the coin, and these challenges must be embraced wholeheartedly. Thankfully, we have dealt with ethics, safety and inclusivity issues in past technology deployments, and while there are certainly novel concerns, we can lean on lessons learned from the past and from other fields to inform how AI systems can be designed, developed and deployed so that they bring the greatest benefit to our communities.

References:

[1] https://www.technologyreview.com/s/614992/ai-ethics-washing-time-to-act/

[2] https://www.brookings.edu/research/credit-denial-in-the-age-of-ai/

[3] http://www.linking-ai-principles.org/principles

[4] https://www.pwc.co.uk/economic-services/ukeo/pwcukeo-section-4-automation-march-2017-v2.pdf

[6] https://github.com/tensorflow/fairness-indicators

[7] https://pair-code.github.io/what-if-tool/

[8] https://www.goodreads.com/book/show/3828902

[9] https://montrealethics.ai/meetup/

Author Bio:

Abhishek Gupta is the founder of the Montreal AI Ethics Institute (https://montrealethics.ai) and a Machine Learning Engineer at Microsoft, where he serves on the CSE AI Ethics Review Board. His research focuses on applied technical and policy methods to address ethical, safety and inclusivity concerns in using AI in different domains. He has built the largest community-driven public consultation group on AI Ethics in the world, which has made significant contributions to the Montreal Declaration for Responsible AI, the G7 AI Summit, the AHRC and WEF Responsible Innovation framework and the European Commission Trustworthy AI Guidelines. His work on public competence building in AI Ethics has been recognized by governments from North America, Europe, Asia and Oceania. More information on his work can be found at https://atg-abhishek.github.io
