See part two with new experts here.

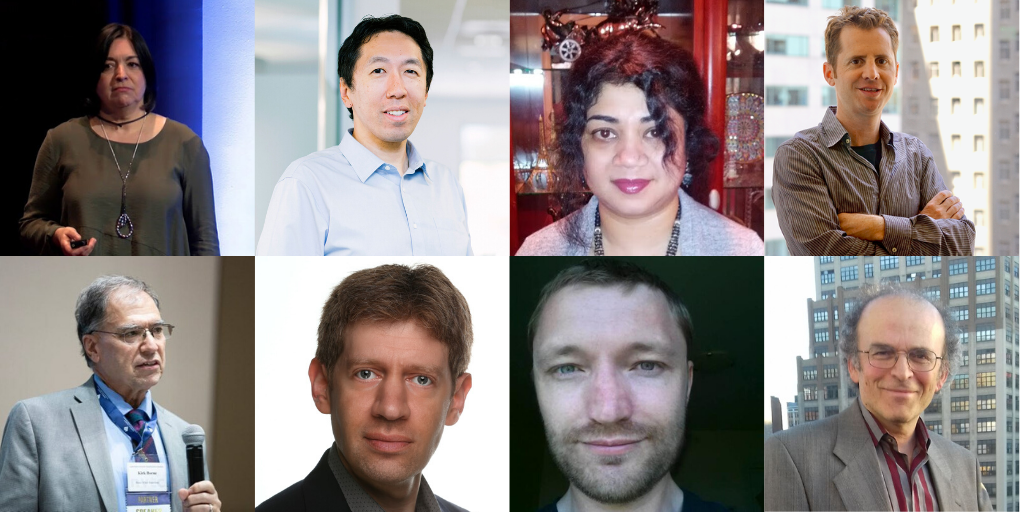

After the 'top AI books' reading list was so well received, we reached out to some members of our community to find out which papers they believe everyone should have read!

All of the papers below are free to access and cover a range of topics, from hypergradients to modeling crop yield response with CNNs. Each expert also gave a reason for their pick, as well as a short bio.

Would you rather listen to your AI fix? Our top AI podcast list can be found here.

Jeff Clune, Research Team Lead at OpenAI

We spoke to Jeff back in January and at that time he couldn't pick just one paper as a must-read, so we let him pick two. Both papers are listed below:

Learning to Reinforcement Learn (2016) - Jane X Wang et al

This paper tackles two key points: deep reinforcement learning's demand for large amounts of training data, and whether recurrent networks, which earlier work showed can support meta-learning in a fully supervised context, can do the same in the reinforcement learning setting. The authors address these points in seven proof-of-concept experiments, each of which examines a key aspect of deep meta-RL. They also consider prospects for extending and scaling up the approach, and point out some potentially important implications for neuroscience. Read more here.
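A central idea in deep meta-RL is that the recurrent network receives its previous action and reward as part of its input, so it can adapt within an episode through its activations rather than its weights. The sketch below, assuming a two-armed Bernoulli bandit, shows that input construction and an episode loop. The hand-written `win_stay_lose_shift` policy is a hypothetical stand-in for a trained LSTM, and all names are illustrative rather than taken from the paper.

```python
import random


def meta_rl_input(observation, prev_action, prev_reward, n_actions):
    """Build one time step's input: observation + one-hot previous action + previous reward."""
    one_hot = [0.0] * n_actions
    if prev_action is not None:  # no previous action on the first trial
        one_hot[prev_action] = 1.0
    return list(observation) + one_hot + [float(prev_reward)]


def win_stay_lose_shift(x, state):
    """Hypothetical stand-in for a trained recurrent policy (two arms, empty observation).

    A real meta-RL agent would be an LSTM whose weights were trained with RL
    across many bandit tasks; this rule only shows how the previous action and
    reward in the input can drive behaviour within a single episode.
    """
    one_hot, reward = x[:2], x[2]
    if sum(one_hot) == 0.0:           # first trial: start with arm 0
        return 0, state
    prev_action = one_hot.index(1.0)
    if reward > 0.0:                  # win: stay on the same arm
        return prev_action, state
    return 1 - prev_action, state     # lose: shift to the other arm


def run_bandit_episode(arm_probs, n_trials, policy_step, seed=0):
    """Run one bandit episode; the policy's state is carried across all trials."""
    rng = random.Random(seed)
    state = None                      # would be the LSTM hidden state
    prev_action, prev_reward = None, 0.0
    total = 0.0
    for _ in range(n_trials):
        x = meta_rl_input([], prev_action, prev_reward, len(arm_probs))
        action, state = policy_step(x, state)
        reward = 1.0 if rng.random() < arm_probs[action] else 0.0
        total += reward
        prev_action, prev_reward = action, reward
    return total
```

With arms paying out with probability 0.0 and 1.0, the stand-in loses once, switches, and then collects a reward on every remaining trial. Replacing it with an LSTM trained across many bandit tasks, whose state is reset only between episodes, is roughly the setup the paper explores.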

Gradient-based Hyperparameter Optimization through Reversible Learning (2015) - Dougal Maclaurin, David Duvenaud, and Ryan P. Adams.

Jeff's second recommendation computes exact gradients of cross-validation performance with respect to all hyperparameters by chaining derivatives backwards through the entire training procedure. Rather than storing the whole training trajectory, it exactly reverses the dynamics of stochastic gradient descent with momentum. These gradients allow the optimization of thousands of hyperparameters, including step-size and momentum schedules, weight initialization distributions, richly parameterized regularization schemes, and neural network architectures. You can read more on this paper here.
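To show what "chaining derivatives backwards through training" means, here is a toy sketch. It assumes a one-parameter quadratic training loss and plain gradient descent (not the paper's SGD with momentum). It computes the exact derivative of a validation loss with respect to the learning rate by running training forward, then undoing it one step at a time while accumulating derivatives. The losses and function names are hypothetical.

```python
def train(w0, lr, a, steps):
    """Plain gradient descent on the toy training loss L_train(w) = (w - a)**2."""
    w = w0
    for _ in range(steps):
        w = w - lr * 2.0 * (w - a)
    return w


def val_loss(w, b):
    """Toy validation loss L_val(w) = (w - b)**2."""
    return (w - b) ** 2


def hypergradient(w0, lr, a, b, steps):
    """Exact dL_val(w_T)/dlr, computed by reversing training (requires lr != 0.5).

    Each step is w_{t+1} = (1 - 2*lr) * w_t + 2*lr*a, so w_t can be recovered
    from w_{t+1} instead of storing every intermediate weight.
    """
    w = train(w0, lr, a, steps)
    dw = 2.0 * (w - b)                           # dL_val / dw_T
    dlr = 0.0
    for _ in range(steps):
        w = (w - 2.0 * lr * a) / (1.0 - 2.0 * lr)  # undo one step: w_{t+1} -> w_t
        dlr += dw * (-2.0 * (w - a))             # dw_{t+1}/dlr, evaluated at w_t
        dw *= 1.0 - 2.0 * lr                     # dw_{t+1}/dw_t
    return dlr
```

In floating point, this naive reversal slowly accumulates rounding error. As far as we understand, the paper handles the equivalent problem for momentum SGD by saving the information lost at each step so that the reversal stays exact.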

Shalini Ghosh, Principal Scientist (Global) and Leader of Machine Learning Research Team, Smart TV division, Samsung Research America

Long Short-Term Memory (1997) - Sepp Hochreiter and Jürgen Schmidhuber

This was a seminal paper in 1997, with ideas that were ahead of their time. It is only recently (i.e., in the last six years or so) that hardware accelerators have been able to run the training and serving operations of LSTMs, which has led to LSTMs being used successfully in many applications (e.g., language modeling, gesture prediction, user modeling). The memory-based sequence modeling architecture of LSTMs has been very influential; it has inspired many recent refinements, e.g., Transformers. This paper has influenced my work heavily. You can read more on this paper here.
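To make the "memory-based" idea concrete, here is a minimal single-unit LSTM step in plain Python. The parameter names are hypothetical. It also uses the now-standard variant with a forget gate, which was introduced in later work rather than in the 1997 paper. The additive update of the cell state `c` is what lets information persist across many time steps.

```python
import math


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def lstm_step(x, h_prev, c_prev, p):
    """One step of a single-unit LSTM cell (modern variant with a forget gate).

    p maps hypothetical names to scalar weights: w* for the input x,
    u* for the previous hidden state, b* for biases.
    """
    i = sigmoid(p["wi"] * x + p["ui"] * h_prev + p["bi"])    # input gate
    f = sigmoid(p["wf"] * x + p["uf"] * h_prev + p["bf"])    # forget gate
    o = sigmoid(p["wo"] * x + p["uo"] * h_prev + p["bo"])    # output gate
    g = math.tanh(p["wg"] * x + p["ug"] * h_prev + p["bg"])  # candidate value
    c = f * c_prev + i * g        # additive memory update
    h = o * math.tanh(c)          # exposed hidden state
    return h, c
```

Running it over a sequence just threads `(h, c)` from one call to the next. With a large positive forget-gate bias and a large negative input-gate bias, the cell state passes through essentially unchanged, which is the long-term memory behaviour LSTMs are known for.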

Efficient Incremental Learning for Mobile Object Detection (2019) - Dawei Li et al

This paper discusses a novel variant of the popular object detection model RetinaNet, and introduces a paradigm of incremental learning that is useful for this and other applications of multimodal learning. The key ideas and incremental learning formulation used in this paper would be useful for anyone working on computer vision, and could pave the way for future innovation in efficient incremental algorithms that are effective on mobile devices. You can read more on this paper here.

Kenneth Stanley, Charles Millican Professor (UCF) and Senior Research Manager, Uber

Emergent Tool Use From Multi-Agent Autocurricula (2019) - Bowen Baker et al

Ken chose this paper because it gives a unique example of emergent behaviours, with a hint of the beginnings of open-endedness. The authors find clear evidence of six emergent phases in agent strategy in their hide-and-seek environment, each of which creates a new pressure for the opposing team to adapt; for instance, agents learn to build multi-object shelters using movable boxes, which in turn leads the opposing agents to discover that they can overcome obstacles using ramps. Read more on this paper here.

Open-endedness: The last grand challenge you’ve never heard of (2017) - Kenneth Stanley et al

We also allowed Ken to include a paper by himself and his colleagues, which he describes as "a non-technical introduction to the challenge of open-endedness". The paper explains what this challenge is, the far-reaching implications of solving it, and how interested readers can join the quest. Read more on this paper here.

Andriy Burkov, Director of Data Science, Gartner

Attention Is All You Need (2017) - Ashish Vaswani et al

Andriy recommended this 2017 paper because, in his own words, it "brought NLP to an entirely new level with pre-trained Transformer models like BERT". The paper proposes a new, simple network architecture, the Transformer, based solely on attention mechanisms and dispensing with recurrence and convolutions entirely. Experiments on two machine translation tasks show these models to be superior in quality while being more parallelizable and requiring significantly less time to train. You can read this paper here.
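The core operation of the Transformer is scaled dot-product attention, softmax(QKᵀ/√d_k)V. Below is a minimal, dependency-free sketch for a single head with no masking, using plain lists of row vectors as matrices. It is meant for illustration only; practical implementations use batched tensor operations and multiple heads.

```python
import math


def softmax(xs):
    """Numerically stable softmax over a list of scores."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def scaled_dot_product_attention(Q, K, V):
    """Compute softmax(Q K^T / sqrt(d_k)) V for lists of row vectors."""
    d_k = len(K[0])
    out = []
    for q in Q:
        # Similarity of this query to every key, scaled by sqrt(d_k).
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d_k) for k in K]
        weights = softmax(scores)
        # Output row is the attention-weighted average of the value rows.
        out.append([sum(w * v[j] for w, v in zip(weights, V)) for j in range(len(V[0]))])
    return out
```

A query that is equally similar to every key gets uniform weights and returns the plain average of the values, while a query strongly aligned with one key returns approximately that key's value. The √d_k scaling keeps dot products from growing with dimension and saturating the softmax.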

Andrew Ng, Founder and CEO of Landing AI; Founder of deeplearning.ai

When we reached out to Andrew, no specific paper came to mind; however, he directed us to a recent post of his highlighting two papers he believed could be of interest. Both papers are listed below.

Modeling yield response to crop management using convolutional neural networks (2020) - Andre Barbosa et al.

In this work, Barbosa et al. propose a Convolutional Neural Network (CNN) to capture relevant spatial structures of different attributes and combine them to model yield response to nutrient and seed rate management. Data from nine on-farm experiments on corn fields are used to construct a suitable dataset to train and test the CNN model. Four architectures that combine input attributes at different stages of the network are evaluated and compared to the most commonly used predictive models. Read more on the paper here.

A comparison of deep learning performance against health-care professionals in detecting diseases from medical imaging: a systematic review and meta-analysis (2019) - Xiaoxuan Liu et al

This paper evaluates the diagnostic accuracy of deep learning algorithms versus health-care professionals in classifying diseases using medical imaging. Studies undertaking an out-of-sample external validation were included in a meta-analysis, using a unified hierarchical model. Read more on this paper here.
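For readers new to diagnostic accuracy: comparisons like this one are typically reported in terms of sensitivity (the share of diseased cases correctly flagged) and specificity (the share of healthy cases correctly cleared). The sketch below computes both from a single 2x2 confusion table. It does not reproduce the paper's hierarchical pooling across studies, and the counts are invented purely for illustration.

```python
def diagnostic_accuracy(tp, fp, fn, tn):
    """Return (sensitivity, specificity) from a 2x2 confusion table.

    tp: diseased cases flagged positive    fn: diseased cases missed
    tn: healthy cases cleared              fp: healthy cases wrongly flagged
    """
    sensitivity = tp / (tp + fn)
    specificity = tn / (tn + fp)
    return sensitivity, specificity


# Hypothetical counts for one model and one group of clinicians on the same test set.
model = diagnostic_accuracy(tp=45, fp=5, fn=15, tn=135)
clinicians = diagnostic_accuracy(tp=48, fp=14, fn=12, tn=126)
```

In this made-up example, the model misses more diseased cases (lower sensitivity) but raises fewer false alarms (higher specificity). This trade-off is why both numbers are reported rather than a single accuracy figure.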

Gregory Piatetsky-Shapiro, Data Scientist, KDnuggets President

When we reached out to Gregory, he explained that his paper choices are driven by trying to understand the big trends in AI and ML, and two recent papers really stood out for him: "Two important papers I read recently are the below from Gary & François. I also recommend watching the debate between Yoshua Bengio and Gary Marcus in Montreal for the former".

The Next Decade in AI: Four Steps Towards Robust Artificial Intelligence (2020) - Gary Marcus

This paper observes that recent research in AI and Machine Learning has largely emphasized general-purpose learning, ever-larger training sets, and ever more compute. In contrast, Gary proposes a hybrid, knowledge-driven, reasoning-based approach, centered around cognitive models, that could provide the substrate for a richer, more robust AI than is currently possible.

On the Measure of Intelligence (2019) - François Chollet

Gregory's second suggestion was François Chollet's 'On the Measure of Intelligence'. The paper summarizes and critically assesses existing definitions of intelligence and approaches to evaluating it, while making apparent the two historical conceptions of intelligence that have implicitly guided them. François then articulates a new formal definition of intelligence based on Algorithmic Information Theory, describing intelligence as skill-acquisition efficiency and highlighting the concepts of scope, generalization difficulty, priors, and experience. Read the paper here.

Myriam Cote, Consultant

Tackling climate change with Machine Learning (2019) - David Rolnick, Priya L Donti, Yoshua Bengio et al.

Myriam's suggestion covers Machine Learning and the effect it can have on the environment. Climate change is one of the greatest challenges facing humanity, and machine learning experts are wondering how they can help. In this paper, the authors describe how machine learning can be a powerful tool in reducing greenhouse gas emissions and helping society adapt to a changing climate. From smart grids to disaster management, they identify high-impact problems where existing gaps can be filled by machine learning, in collaboration with other fields. Read more on the paper here.

Kirk Borne, The Principal Data Scientist & Data Science Fellow, and Executive Advisor at Booz Allen Hamilton

The Netflix Recommender System: Algorithms, Business Value, and Innovation (2015) - Carlos Gomez-Uribe & Neil Hunt.

"This paper is a few years old and not particularly technical, but it covers a lot of fundamental issues, business decision points, algorithmic characteristics, metrics, and data features that one must think about, test, and validate before, during, and after deploying an AI algorithm in an operational environment. I also like this paper because recommender engines are popular, are used in many different industries, and are well recognized by everyone (even non-experts) -- consequently, this paper can quickly bring students (and others) into a deeper, richer understanding of algorithms and their opportunities for fun and profit. Read the paper here.
