Due to the overwhelming response to our previous expert paper suggestion blog, we had to do another. We asked members of our expert community which papers they would suggest everybody read when working in the field.

Haven't seen the first blog? You can read the recommendations of Andrew Ng, Jeff Clune, Myriam Cote and more here.

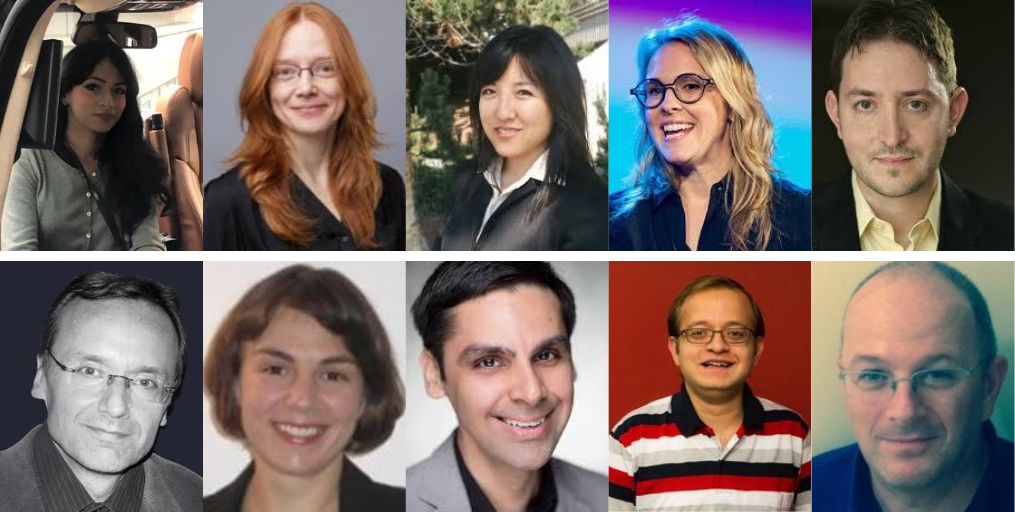

Alexia Jolicoeur-Martineau, PhD Researcher, MILA

f-GAN: Training Generative Neural Samplers using Variational Divergence Minimization - Sebastian Nowozin et al.

https://arxiv.org/pdf/1606.00709.pdf

Alexia suggested this paper as it explains how many classifiers can be thought of as estimating an f-divergence. Thus, GANs can be interpreted as estimating and minimizing a divergence. This paper from Microsoft Research clearly lays out its methods, experiments and related work in support of that view. Read this paper here.

Sobolev GAN - Youssef Mroueh et al.

https://arxiv.org/pdf/1711.04894.pdf

This paper shows how the gradient norm penalty (used in the very popular WGAN-GP) can be thought of as constraining the discriminator to have its gradient within a unit ball. The paper is very mathematical and complicated, but the key message is that we can apply a wide variety of constraints to the discriminator/critic. These constraints help prevent the discriminator from becoming too strong. I recommend focusing on Table 1, which shows the various constraints that can be used. I have come back to this paper many times just to look at Table 1. You can read this paper here.
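To make the gradient norm penalty concrete, here is a minimal NumPy sketch of the WGAN-GP style penalty term. It is not code from the Sobolev GAN paper: the toy critic `tanh(x . w)`, the helper names and the weight `lam=10.0` are assumptions chosen for illustration, and the critic is simple enough that its input gradient can be written by hand.

```python
import numpy as np

def critic_input_gradient(x, w):
    # Toy critic (hypothetical): D(x) = tanh(x . w).
    # Its gradient with respect to x is (1 - tanh(x . w)^2) * w.
    return (1.0 - np.tanh(x @ w) ** 2)[:, None] * w[None, :]

def gradient_penalty(real, fake, w, rng, lam=10.0):
    # Sample points on straight lines between real and generated samples.
    eps = rng.uniform(size=(real.shape[0], 1))
    x_hat = eps * real + (1.0 - eps) * fake
    # Penalise critic gradient norms that move away from 1 at those points.
    grad_norm = np.linalg.norm(critic_input_gradient(x_hat, w), axis=1)
    return lam * np.mean((grad_norm - 1.0) ** 2)

rng = np.random.default_rng(0)
real = rng.normal(size=(8, 3))   # stand-in for a batch of real samples
fake = rng.normal(size=(8, 3))   # stand-in for a batch of generated samples
w = rng.normal(size=3)           # critic parameters
penalty = gradient_penalty(real, fake, w, rng)
print(penalty)
```

In a real training loop the gradient would come from automatic differentiation, and the penalty would be added to the critic loss. Swapping the norm or the target inside `gradient_penalty` is one way to picture the family of constraints the paper compares.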

Jane Wang, Senior Research Scientist, DeepMind

To be honest, I don't believe in singling out any one paper as being more important than the rest, since I think all papers build on each other, and we should acknowledge science as a collaborative effort. I will say that there are some papers I've enjoyed reading more than others, and that I've learned from, but others might have different experiences, based on their interest and background. That said, I've enjoyed reading the following:

Where Do Rewards Come From? - Satinder Singh et al.

https://all.cs.umass.edu/pubs/2009/singh_l_b_09.pdf

This paper advances a general computational framework for reward that places it in an evolutionary context, formulating a notion of an optimal reward function given a fitness function and some distribution of environments. Novel results from computational experiments show how traditional notions of extrinsically and intrinsically motivated behaviors may emerge from such optimal reward functions. You can read this paper here.

Building machines that learn and think like people - Brenden Lake et al.

This paper reviews progress in cognitive science suggesting that truly human-like learning and thinking machines will have to reach beyond current engineering trends in both what they learn and how they learn it. Specifically, the authors argue that these machines should (1) build causal models of the world that support explanation and understanding, rather than merely solving pattern recognition problems; (2) ground learning in intuitive theories of physics and psychology to support and enrich the knowledge that is learned; and (3) harness compositionality and learning-to-learn to rapidly acquire and generalize knowledge to new tasks and situations. Read more on this paper here.

Jekaterina Novikova, Director of Machine Learning, WinterLight Labs

Attention Is All You Need - Ashish Vaswani et al.

https://arxiv.org/abs/1706.03762

Novel large neural language models like BERT or GPT-2/3 were developed soon after NLP scientists realized in 2017 that "Attention is All You Need". The exciting results produced by these models caught the attention of not just ML/NLP researchers but also the general public. For example, GPT-2 caused near mass hysteria in 2019 as a model that is "too dangerous to be public", as it can potentially generate fake news indistinguishable from real news articles. GPT-3, which was only released several weeks ago, has already been called "the biggest thing since bitcoin". You can read this paper here.
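For readers who have not seen the mechanism behind the title, the core operation is scaled dot-product attention: each query is compared with every key, the scores are turned into weights with a softmax, and the output is a weighted average of the values. The NumPy sketch below is a simplified, single-head illustration with made-up shapes and random inputs. It is not the full Transformer and leaves out masking, multiple heads and learned projections.

```python
import numpy as np

def softmax(x, axis=-1):
    # Subtract the row maximum first for numerical stability.
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)

def scaled_dot_product_attention(Q, K, V):
    d_k = Q.shape[-1]
    # Similarity of every query with every key, scaled by sqrt(d_k).
    scores = Q @ K.T / np.sqrt(d_k)
    # Each row of weights sums to 1 and says where that query "looks".
    weights = softmax(scores, axis=-1)
    return weights @ V, weights

rng = np.random.default_rng(0)
Q = rng.normal(size=(3, 4))   # 3 queries of dimension 4 (illustrative sizes)
K = rng.normal(size=(5, 4))   # 5 keys of dimension 4
V = rng.normal(size=(5, 2))   # 5 values of dimension 2
output, weights = scaled_dot_product_attention(Q, K, V)
print(output.shape, weights.shape)
```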

Climbing towards NLU: On Meaning, Form, and Understanding in the Age of Data - Emily M. Bender and Alexander Koller

https://www.aclweb.org/anthology/2020.acl-main.463.pdf

To counterbalance the hype, I would recommend that everyone read a great paper that was recognized as the best theme paper at the ACL conference at the beginning of July 2020: "Climbing towards NLU: On Meaning, Form, and Understanding in the Age of Data". In the paper, the authors argue that while existing models such as BERT or GPT are undoubtedly useful, they are not even close to a human-analogous understanding of language and its meaning. The authors explain that understanding happens when one is able to recover the communicative intent of what was said. As such, it is impossible to learn and understand language if language is not associated with some real-life interaction, or in other words, "meaning cannot be learned from form alone". This is why even very large and complex language models can only learn a "reflection" of meaning but not meaning itself. Read more on the paper here.

Eric Charton, Senior Director, AI Science, National Bank of Canada

The Computational Limits of Deep Learning - Neil C. Thompson et al.

https://arxiv.org/abs/2007.05558

This recent paper from MIT and IBM Watson Lab is a meta-analysis of DL publications highlighting the correlation between the growing computational cost of training DL models and improvements in their performance. It also notes that performance gains are slowing even as computational capacity increases. You can read more on this paper here.

Survey on deep learning with class imbalance - Justin M. Johnson and Taghi M. Khoshgoftaar, Journal of Big Data, 6(1), 27

https://link.springer.com/article/10.1186/s40537-019-0192-5

This suggestion is an exhaustive paper about how the class imbalance problem (present in many industrial applications such as credit modelling and fraud detection, and in medical applications such as cancer detection) is handled by DL algorithms. The survey concludes with a discussion that highlights various gaps in deep learning from class-imbalanced data and opens multiple tracks for future research. You can read more on this paper here.

Anirudh Koul, Machine Learning Lead, NASA

The one silver lining of 2020 will be the revolution of self-supervision, aka pretraining without labels and then fine-tuning for a downstream task with limited labels. The state-of-the-art metrics have been shattered more times than there have been months so far this year. PIRL, SimCLR, InfoMin, MOCO, MOCOv2, BYOL, SwAV and SimCLRv2 are just a few well-known names from this year (some serious FOMO), and that is only up to June 2020. To appreciate the current state of the art: after pretraining on ImageNet without labels and then fine-tuning with 1% of the labels, SimCLRv2 models are able to achieve 92.3% Top-5 accuracy on the ImageNet dataset. Yup, with just 1% of the labels. This has huge practical applications on datasets with way more data than labels (think medical, satellite, etc).

A Simple Framework for Contrastive Learning of Visual Representations - Ting Chen et al.

https://arxiv.org/abs/2002.05709

Great papers don't just have exceptional results and rigorous experimentation, they also convey their key thoughts in a simple manner. And luckily, SimCLR has "simple" in its very name, putting it among the first papers worth reading in the area of contrastive learning. Among many lessons, it shows the critical role that data augmentation strategies specific to your dataset domain play during contrastive learning in obtaining a better representation of images. I expect many papers and tools inspired by SimCLR to come up in the future, addressing X-Rays, MRIs, audio, satellite imagery, and more.
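As a rough illustration of what contrastive learning optimizes, the sketch below implements a normalized temperature-scaled cross-entropy loss of the kind SimCLR uses, in plain NumPy. Two augmented views of the same image should end up with similar embeddings, while views of different images should not. The random "embeddings", the batch size and the temperature of 0.5 are all assumptions for the demo. A real pipeline would produce the embeddings with an encoder and compute gradients with a deep learning framework.

```python
import numpy as np

def nt_xent_loss(z_a, z_b, temperature=0.5):
    # z_a[i] and z_b[i] are embeddings of two augmented views of image i.
    n = z_a.shape[0]
    z = np.concatenate([z_a, z_b], axis=0)
    z = z / np.linalg.norm(z, axis=1, keepdims=True)   # cosine similarity
    sim = z @ z.T / temperature
    np.fill_diagonal(sim, -np.inf)                     # never compare a view with itself
    # Index of the positive partner for each of the 2n views.
    positives = np.concatenate([np.arange(n, 2 * n), np.arange(0, n)])
    # Row-wise log-softmax, computed stably.
    sim_max = sim.max(axis=1, keepdims=True)
    log_prob = sim - sim_max - np.log(np.exp(sim - sim_max).sum(axis=1, keepdims=True))
    return float(-log_prob[np.arange(2 * n), positives].mean())

rng = np.random.default_rng(0)
views_a = rng.normal(size=(16, 8))
views_b_aligned = views_a + 0.05 * rng.normal(size=(16, 8))  # views that agree
views_b_random = rng.normal(size=(16, 8))                    # views that do not

loss_aligned = nt_xent_loss(views_a, views_b_aligned)
loss_random = nt_xent_loss(views_a, views_b_random)
print(loss_aligned, loss_random)
```

The loss is lower when paired views agree, which is the signal the encoder is trained on. The augmentations decide which differences the representation learns to ignore.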

Oana Frunza, Vice President, NLP and ML Researcher at Morgan Stanley

Revealing the Dark Secrets of BERT - Olga Kovaleva et al.

https://arxiv.org/abs/1908.08593

The BERT Transformer architecture allowed for textual data representation and understanding to reach new levels. It created the ImageNet moment for the NLP community. The Transformer architecture draws its power from the self-attention mechanism. In the “Revealing the Dark Secrets of BERT” paper, the authors shed light onto what’s happening under the hood of an attention mechanism.

More precisely, the study quantifies linguistic information like syntactic roles and semantic relations which are captured by the attention heads. It also investigates the diversity of the self-attention patterns and the impact they have on various tasks.

The paper shifts the focus to understanding these powerful architectures that we are so excited to use. This is important not only for moving the field forward, but also for providing researchers with more informed decision-making powers. Better decisions in terms of architecture and footprint impact can be made if one knows that a particular smaller and distilled architecture would yield similar performance.

Read more on this paper here.

Tamanna Haque, Senior Data Scientist at Jaguar Land Rover

Deep Learning with R - François Chollet et al.

This book gives a useful understanding of neural networks, from concept through to valuable uses in practice. Neural networks are considered expensive and 'mysterious' due to their black-box nature, and recent changes to data protection regulations have shifted sentiment in favour of explainable AI.

Still, there are business cases where only neural networks can do the job effectively, so they are worth knowing about to ensure technical readiness. This book stood me in good stead a year after I studied it (practically, with R), when I had to get up to speed on an image recognition project quickly and use neural networks expertly.

Mike Tamir, Chief ML Scientist & Head of Machine Learning/AI at SIG, Data Science Faculty-Berkeley

Right for the Wrong Reasons: Diagnosing Syntactic Heuristics in Natural Language Inference

First, I'd suggest "Right for the Wrong Reasons: Diagnosing Syntactic Heuristics in Natural Language Inference" by McCoy, Pavlick and Linzen. Transformer-based architectures have driven remarkable advances in solving NLU tasks like Natural Language Inference. Without discounting the promise of these techniques, "Right for the Wrong Reasons" highlights important vulnerabilities in the experimental methodology of Deep NLU research that stem from systematic flaws in current data sets. It is an important read for practitioners and researchers who want to stay sober about these results. You can read this paper here.

Emergence of Invariance and Disentanglement in Deep Representations

Second, I've been interested in vindications of information bottleneck analyses of DL recently. To this end I want to put in a plug for Achille and Soatto's research: "Emergence of Invariance and Disentanglement in Deep Representations" in particular deserves more attention. You can read more on this paper here.

Abhishek Gupta, Founder, Montreal AI Ethics Institute

The State of AI Ethics Report

https://montrealethics.ai/the-state-of-ai-ethics-report-june-2020/

At the Montreal AI Ethics Institute, we've been keeping tabs on some of the most impactful papers that move beyond the familiar tropes of issues in ethical AI to ones that are rooted in empirical science and strongly biased towards action. To that end, we have spent the past quarter curating this work and have assembled it into the State of AI Ethics June 2020 report. It is a great way for researchers and practitioners in the field to quickly catch up on some of the most relevant developments in the space of building responsible AI. Highlights from the report include Social Biases in NLP models as barriers for persons with disabilities, The Toxic Potential of YouTube's Feedback Loop, AI Governance: A Holistic Approach to implementing ethics in AI, and Adversarial Machine Learning - Industry Perspectives.

Jack Brzezinski, Chief AI Scientist, AI Systems and Strategy Lab

The Discipline of Machine Learning

http://www.cs.cmu.edu/~tom/pubs/MachineLearning.pdf

This document provides a brief and personal view of the discipline that has emerged as Machine Learning, the fundamental questions it addresses, its relationship to other sciences and society, and where it might be headed.

You can see more on the paper here.

Diego Fioravanti, Machine Learning Engineer, AIRAmed

Overfitting

https://en.m.wikipedia.org/wiki/Overfitting

A general overview of 'Overfitting' was the suggestion from Diego. This short descriptive text covers statistical inference, regression and more. Read the article and download a PDF Version here.
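For a quick feel of what overfitting looks like in practice, here is a small NumPy sketch that is not taken from the article. It fits a straight line and a degree-9 polynomial to ten noisy points drawn from a linear trend, then compares errors on the training points and on fresh points. The data, noise level and polynomial degrees are arbitrary assumptions for illustration.

```python
import numpy as np

rng = np.random.default_rng(0)

def noisy_line(n):
    # Underlying trend is y = 2x, observed with Gaussian noise.
    x = np.linspace(-1.0, 1.0, n)
    y = 2.0 * x + rng.normal(scale=0.3, size=n)
    return x, y

def mse(coeffs, x, y):
    return float(np.mean((np.polyval(coeffs, x) - y) ** 2))

x_train, y_train = noisy_line(10)
x_test, y_test = noisy_line(200)

simple = np.polyfit(x_train, y_train, deg=1)     # matches the true trend
flexible = np.polyfit(x_train, y_train, deg=9)   # enough freedom to hit every point

train_lo, test_lo = mse(simple, x_train, y_train), mse(simple, x_test, y_test)
train_hi, test_hi = mse(flexible, x_train, y_train), mse(flexible, x_test, y_test)
print("degree 1: train", train_lo, "test", test_lo)
print("degree 9: train", train_hi, "test", test_hi)
```

The degree-9 fit drives its training error to almost zero by chasing the noise, so its error on fresh points is much larger than its training error. That gap between training and held-out error is the usual symptom of overfitting.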

Interested in hearing more from our AI industry experts? We are hosting live digital talks with over 50 experts in just three weeks' time. See more on this here.
