AI is an extremely diversified field, with various subsets under its umbrella, including Machine Learning, Deep Learning, and Reinforcement Learning to name but a few. Over the past 18 months, many experts have suggested that the development of Reinforcement Learning, although holds huge potential, is still very much in its infancy. To breakdown its potential, we've gathered experts in the field to share their thoughts on what the upcoming years could hold. The content below was gathered from members of the RE•WORK community including Lex Fridman of MIT, Thomas Simonini of UnityML Course, Doina Precup of UoM and more.

TL;DR:

- Extrinsic reward for an RL agent is not scalable due to the need for human coding

- Arcade Learning Environments play an important role in development by allowing the systematic comparison of different algorithms

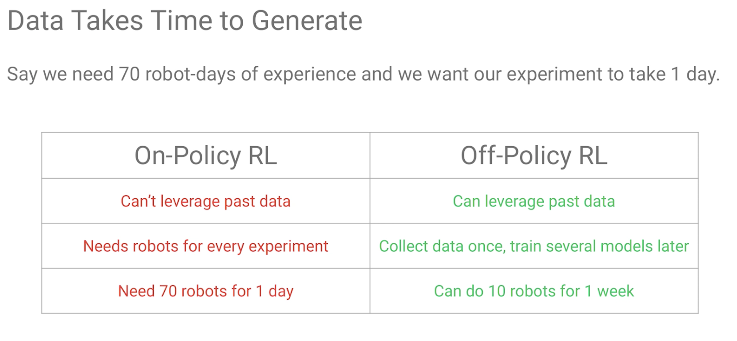

- Data Generation takes time! On-Policy RL cannot leverage past data & needs huge mechanical resource for each experiment

- The role of RL lies in being a tool for building knowledge representations in AI agents whose goal is to perform continual learning

- What are the main things we could see over the next few years? RL development in robotics, Human Behaviour Development & Self-play, one of the most important things in AI, and a really powerful idea, which can explore objectives and behaviours

No time to read? Video gallery links in footer.

Firstly, let me explain what Reinforcement Learning (RL), actually is. Recognised as a subset of Machine Learning (ML), RL, is a technique used to aid the development of 'learning' in an environment, through the process of trial and error. This in turn, uses 'feedback' to correct itself. It should also be referenced at this point that alongside RL there are two other prevalent learning methods under ML, including supervised and unsupervised, the details of which were best explained on Towards Data Science here.

Supervised learning tasks find patterns where we have a dataset of “right answers” to learn from. Unsupervised learning tasks find patterns where we don’t. This may be because the “right answers” are unobservable, or infeasible to obtain, or maybe for a given problem, there isn’t even a “right answer” per se.

Unlike the above whereby feedback provided to the agent is a correct set of actions for performing a task, Reinforcement Learning uses rewards and punishment as signals for positive and negative behavior, with the goal to find a suitable action model that would maximize the total cumulative reward of the agent.

You with me?

“Reinforcement learning allows autonomous agents to learn how to act in a stochastic, unknown environment, with which they can interact.”

A few months ago, we welcomed Doina Precup to speak on RL, at which time she suggested that the role of RL lies in being a tool for building knowledge representations in AI agents whose goal is to perform continual learning. Doina discussed Reinforcement Learning as something which is inspired by animal learning, that is, an agent which is interacting with an environment with reward functions made available to those who succeed and negative rewards for those which are not successful. Doina was clear in breaking the stereotype that RL could only be used for data driven tasks when she quipped on the idea of Poutine based robotics (we were in Canada to be fair).

Whilst the audience at that time laughed, there was some logic behind this statement, with Doina suggesting that from the viewpoint of advancing AI, this would be a great task. How you ask? Well, whilst we do not see cooking as a benchmark for intelligence, it requires regular troubleshooting, an advancement of knowledge and the ability to interact with an environment which is far less structural than those shown in video games. The underlying point? That we can use RL not just to learn simple problem solving skills, but to build knowledge which can be applied to wider societal tasks. Further still, Doina suggested that the procedural knowledge capability of alphaGO can only complete an action against a current state and value function which shows estimation of expectation against long time return. This is something we want to overcome when looking forward. The next step, of course, is one of much greater difficulties, as we face the problematic creation of opinion and allowing an agent to make its own choice in tasks of greater complexity.

Junhyuk Oh, Senior Research Scientist at DeepMind has also suggested that researchers in the Deep RL area have also developed many techniques for training highly non-linear functions from noisy error signals in reinforcement learning. In addition, Junhyuk also reinforced the importance of good benchmarks like Arcade Learning Environment (Atari games) and their key role by allowing us to systematically compare different algorithms. Junhyuk suggested that the more challenging and interesting benchmarks (e.g., 3D world, language-based environment) will push the boundaries further, proving that once more, video games are a staple of AI development.

The perfect recipe for RL Algorithms?

Shane Gu, Research Scientist at Google talked us through what it takes to makeup the recipe for a smart RL system, consisting of layers, broken up at a high level into the brackets - Algorithm, Automation and Reliability. When learning a Q function, the algorithm can only 'learn' in respect to one reward. What you can do thereafter, is parameterize that word function, by off-policy learning, ultimately using the same sample to learn for an infinite number of goals.

The core level of the recipe consists of an algorithm which has sample-efficiency, stability and scalability, which, in-turn, allows for 'Human-free' learning, moving away from manual re-setting, reward engineering and human administered function toward autonomous and safe continual learning. This thereafter leads to exploration and the ability to utilise universal reward. The top layer, inside the reliability band, requires interpretability, risk awareness, transferability and temporal abstraction as part of the RL algorithm. You can see Shane Gu explain this in much greater detail here.

A different thought: Curiosity-Learning

Another aspect which we have seen discussed in relation to RL is that of Curiosity Learning. The idea, discussed by Thomas Simonini works to bypass the initial problems in regard to extrinsic reward being hard-coded by humans, making it, therefore, non-scalable. Curiosity-Learning, however, is to build a reward function that is intrinsic to the agent (generated by the agent itself). It means that the agent will be a self-learner since he will be the student but also the feedback master. How, you say? Calculations of error are done to predict consequences of poor decision-making, this is done to encourage said agent to perform actions that reduce the uncertainty in prediction of its own action (disclaimer - uncertainty will be higher in areas where the agent has spent less time, or in areas with complex dynamics).

Why is this exciting? Well, mainly due to the fact that it can aid in the problem of sparse rewards aka rewards that equal to zero. That’s the case in most video games, you don’t have a reward at each timestep. There are still problems, however, including the need for the agent to have feedback to know if its action was good or not. Using an intrinsic reward like curiosity helps to get rid of that problem (since a curiosity reward is generated at each timestep). Watch in full here.

Restrictions currently faced

During Doina's talk, it was suggested that we should not expect RL development in too much haste, mainly because we have spent that last thirty years helping agents build predictive knowledge. We must recognise that even with generalisation possible, we must focus more so on the incremental changes possible, referenced to by Doina as lego bricks, which can see smaller value functions added together over a period of time. This should be seen as a way forward which can aid in the development of groundbreaking algorithms as there is seemingly no longer just a ‘single task’. You can also see the full blog of Doina's talk here and her presentation in video form here.

Another challenge which should be recognised when looking at RL is the time it takes to generate data. This seems to be commonplace in all talks I have heard surrounding different algorithms and subsets of AI. Alex Irpan, Software Engineer at Google Brain, proved this point when walking us through both On-Policy and Off-Policy Learning. That is, with On-Policy learning, some agent interacts with the world which goes into training data assumes data coming in generated by current agent, downside of this is that you cant re-use for future on-policy. Conversely, Off-Policy learning can train with data from other agents experience and can be combined from several experiments. These off-policy don't make assumptions of where data comes from. Why do we care? Generalisation requires a lot of data, around a million examples of labelled images and 1.5 million object instances to be exact. This can also be seen in the table below and explained further by Alex here.

Along with time and data, Lex Fridman has explained that one of the bigger hurdles faced in RL is the human expectation of models. It’s still not fully understood that it can learn a function in a lean manner - it’s over parameterised but for now, we should be impressed by the current direction. With examples from autonomous driving, Lex suggested that the level of allowance for mistakes in human behaviour is not yet extended to RL machines, suggesting that a black-box style of accuracy is expected from AI. We also place far too much pressure on its development, especially when you consider that regular human mistakes are forgiven when met with excuse, however, it takes only one AI-based mishap for news articles, blogs and media outlets (mostly outside of the industry) to write-off years of development.

“There hasn’t been a truly intelligent rule-based system which is reliable (in AI), however, human beings are not very reliable or explainable either.”

You can see the full blog on Lex's intro to RL here.

What should we be mindful of going forward?

The first thing, which is key, is patience. Many are too quick to try and advance Reinforcement Learning through tinkering to develop new algorithmic possibilities without allowing RL the time to learn for itself. The second is somewhat of an open question right now. We have reached a stage at which we are weighing the value functions and understanding what the agent would like to do. How can we aggregate an agents actions? That, we’re not quite sure on yet!

As humans, we don’t want to know the truth, we simply want to understand. When this isn’t possible, ideas and developments are often rejected as futile. The remarks from Lex that DL needs to become more like the person in school who studies social rules, finding out what is cool and uncool to do/say, suggested that the openness and honesty seen in current algorithms makes it easy for problems to meet a disgruntled audience.

Segmented breakdown of Deep RL

Unsure on the difference between RL & Deep RL? I have broken it down below.

Deep Reinforcement Learning (DRL) is praised as a potential answer to a multitude of application based problems previously considered too complex for a machine. The resolution of these issues could see wide-scale advances across different industries, including, but not limited to healthcare, robotics and finance.

Deep Reinforcement Learning is the combination of both Deep Learning and Reinforcement Learning, but how do the two combine and how does the addition of Deep Learning improve Reinforcement Learning? We have heard the term Reinforcement Learning for some time, but over the last few years, we have seen an increase in excitement around DRL and rightly so. Simply put, a Reinforcement Learning agent becomes a Deep Reinforcement learning agent when layers of artificial neural networks are leveraged somewhere within its algorithm. The easiest way of understanding DRL, as cited in Skymind's guide to DRL, is to consider it in a video game setting. Before jumping headfirst down the DRL rabbit hole, I will first cover some key concepts which will be referenced throughout.

1. Agent - The agent is just as it sounds, an entity which takes actions. You are an agent, and for the benefit of this blog, Pacman is an agent. The agent itself learns by interacting with an environment, but more on this later.

2. Environment - The environment is the physical world in which the agent operates and performs, the setting of a game, for example.

3. State - The state refers to the immediate or present situation an agent finds itself in, this could be both presently or on the future. A configuration of environmental inputs which create an immediate situation for the agent.

4. Action - The action is the variety of possible decisions or movements an agent can make. The example here could be a decision needed in Pacman (I know, bare with me), in regard to moving up, down, left or right in order of avoiding losing a life.

5. Reward - The reward is the feedback, both positive and negative, which allows the agent to understand the consequences of its actions in the current environment it finds itself in.

DRL is often compared to video games due to its similarity in processes. Let me explain: imagine that you are playing your favourite video game and you find yourself easily completing all levels on medium difficulty, so you decide to step up. The chances are, the higher difficulty will cause you to regularly fail due to unforeseen obstacles which present themselves. If you’re persistent, you will learn the obstacles and challenges throughout each level, which can then be beaten or avoided the next time.

Not only this, but you would be learning each step in the hope of receiving maximum reward, be it coins, fruit, extra lives etc. Reinforcement learning works much in this way, with the particular agent evaluating it’s current state in relation to the environment, gathering feedback throughout, with positive (gaining more points in a level) and negative (losing lives and having to start again) outcomes. It is then through this that an agent learns the easiest route to victory via trial and error, all the while teaching itself the best methods for each task. This then culminates in the near perfect methodology for a task, increasing efficiency and productivity.

So, what’s all of the fuss about?

With the potential to use DRL for a range of tasks, including those which could improve the quality of hospital care and life enhancement, seen through a greater amount of patient care and regularly scheduled appointments. The increase in automation will also see the end to tedious and exhausting tasks being undertaken by humans, and if we are to believe reports, it will not stop there, with over half of today’s work activities in the automation firing line by 2055. That said, we are still at the very first steps of DRL. It must also be recognised that in its infancy, there are issues with DRL, with many claiming that it does not yet work and that whilst the hype should be recognised, there is a huge amount of further research needed. Can it solve all your problems, well, that depends who you ask, but there is no doubting the huge potential which could create wide-scale changes in the next few years!

Interested in reading more on RL & Deep RL? See some of our keynotes talks on our Video Hub here:

Towards Simplifying Supervision In Deep Meta - Abhishek Gupta, PhD Student at UC Berkeley

Off Policy Reinforcement For Real-World Robots - Alex Irpan , Software Engineer at Google Brain

Rewards, Resets, Exploration: Bottlenecks For Scaling Deep RL In Robotics - Shane Gu, Research Scientist at Google Brain

Driving Cars On UK Roads With Deep Reinforcement Learning - Alex Kendall, CTO & Co-Founder at Wayve

Secure Deep Reinforcement Learning - Dawn Song , Professor of Computer Science at UC Berkeley

POET: Endlessly Generating Increasingly Complex and Diverse Learning Environments and their Solutions through the Paired Open-Ended Trailblazer - Jeff Clune, Research Manager/ Team Lead at OpenAI

Building Knowledge For AI Agents With Reinforcement Learning - Doina Precup, Associate Professor at McGill University

Lifelong Learning For Robotics - Franziska Meier, Research Scientist at Facebook AI Research

Financial Applications of Reinforcement Learning - Igor Halperin , Research Professor of Financial Machine Learning at New York University

Learning Abstractions With Hierarchical Reinforcement Learning - Ofir Nachum, Brain Resident at Google

Learning Values And Policies From State Observations - Ashley Edwards , Research Scientist at Uber AI Labs

Reinforcement Learning in Interactive Fiction Games - Matthew Hausknecht , Researcher at Microsoft Research AI

Can Deep Reinforcement Learning Improve Inventory management - Joren Gijsbrechts, PhD Student at KU Leuven

Learning To Act By Learning To Describe - Jacob Andreas , Assistant Professor and Senior Researcher at MIT/ Microsoft Semantic Machines

What are the Key Obstacles Preventing the Progression And Application Of Deep RL Industry - Franziska Meier, Facebook AI Research ; Peter Henderson , Stanford University ; Joren Gijsbrechts, KU Leuven; Dmytro Filatov , AI Mechanic & Aimech Technologies

Deep Reinforcement Learning On Robotics By Priors - Jianlan Luo , PhD Student at UC Berkeley

Continually Evolving Machines: Learning by Experimenting - Pulkit Agrawal , Research Scientist at UC Berkeley

DRL For Robots In Extreme Environments - Alicia Kavelaars, CTO & Co-Founder at OffWorld

Learning To Reason, Summarise and Discover With Deep Reinforcement Learning - Stephan Zheng , Senior Research Scientist at Salesforce Research

Robots And The Sence Of Touch - Roberto Calandra , Research Scientist at Facebook AI Research

Interested in reading more leading AI content from RE•WORK and our community of AI experts? See our most-read blogs below:

Top AI Resources - Directory for Remote Learning

10 Must-Read AI Books in 2020

13 ‘Must-Read’ Papers from AI Experts

Top AI & Data Science Podcasts

30 Influential Women Advancing AI in 2019

‘Must-Read’ AI Papers Suggested by Experts - Pt 2

30 Influential AI Presentations from 2019

AI Across the World: Top 10 Cities in AI 2020

Female Pioneers in Computer Science You May Not Know

10 Must-Read AI Books in 2020 - Part 2

Top Women in AI 2020 - Texas Edition

2020 University/College Rankings - Computer Science, Engineering & Technology

How Netflix uses AI to Predict Your Next Series Binge - 2020

Top 5 Technical AI Presentation Videos from January 2020

20 Free AI Courses & eBooks

5 Applications of GANs - Video Presentations You Need To See

250+ Directory of Influential Women Advancing AI in 2020

The Isolation Insight - Top 50 AI Articles, Papers & Videos from Q1

Reinforcement Learning 101 - Experts Explain

The 5 Most in Demand Programming Languages in 2020