Moving models from data science, where they are trained on historical data, into production operations for inferencing on new data requires the integration of people, business processes and technologies. At SAS we often use the phrase Analytics Lifecycle to describe the virtuous, continual management of analytics from data to discovery and modeling to deployment.

As a data scientist at SAS I thought I would be talking more with customers about machine learning algorithms, what algorithms to use and how to validate the models for generalization, but customers seem to have a good handle on building models using machine learning.

Over the last decade I’ve had tons of discussions about how to score models in a production environment where they can provide value to the business. It is only once models are deployed into production that they start adding value, for example by detecting the likelihood of fraud each time a credit card is swiped or by calculating the sentiment of each call at the contact center. So, I would like to share a few strategies to reduce some of the complexity and risk associated with deploying machine learning models.

About Model Pipelines

A model pipeline comprises data wrangling, feature engineering and extraction, and the model formulae. It may also be layered with rules. A good illustration is a classification model, which must include the data aggregations, joins and variable preprocessing that have to be applied along with the model at inference time. The data preparation logic, including the transformation logic, is essential for scoring. This data wrangling phase, which includes defining all the transformation logic to create new features, is typically handled by a data scientist. To deploy the model, you must then replicate that phase. A common issue is that this task often falls to a data engineer or IT staff, who integrate the model into the company’s decision support systems. Unfortunately, when there isn’t enough rigor and metadata to re-create the data wrangling phase for scoring, many of the backward data source dependencies for deriving the new scoring tables get lost. I’ve found this to be by far the biggest cause of delays in putting a model to work, though a few others keep coming up. With that in mind, here are some ideas to improve the process.
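To make the idea of keeping data preparation and the model together a little more concrete, here is a minimal Python sketch. It is not SAS code, and everything in it is an assumption made for illustration: the `ModelPipeline` class, the feature names (`amount`, `avg_amount_90d`), and the weights are all hypothetical. The point is only that the transformation steps travel with the model, so scoring a raw record cannot skip them.

```python
from dataclasses import dataclass
from math import exp, log
from typing import Callable, Dict, List

Row = Dict[str, float]
Step = Callable[[Row], Row]


def add_log_amount(row: Row) -> Row:
    """Hypothetical feature: log-transformed transaction amount."""
    out = dict(row)  # copy so the raw record is never mutated
    out["log_amount"] = log(1.0 + row["amount"])
    return out


def add_amount_ratio(row: Row) -> Row:
    """Hypothetical feature: amount relative to the 90-day average."""
    out = dict(row)
    out["amount_ratio"] = row["amount"] / max(row["avg_amount_90d"], 1e-9)
    return out


@dataclass
class ModelPipeline:
    """Bundles the preparation steps with the model formula."""
    steps: List[Step]
    weights: Dict[str, float]
    intercept: float

    def transform(self, row: Row) -> Row:
        # Apply every preparation step in the order it was defined.
        for step in self.steps:
            row = step(row)
        return row

    def score(self, row: Row) -> float:
        # Logistic model applied to the engineered features.
        features = self.transform(row)
        z = self.intercept + sum(
            w * features[name] for name, w in self.weights.items()
        )
        return 1.0 / (1.0 + exp(-z))


# Made-up weights, for illustration only.
pipeline = ModelPipeline(
    steps=[add_log_amount, add_amount_ratio],
    weights={"log_amount": 0.4, "amount_ratio": 0.8},
    intercept=-3.0,
)

raw_swipe = {"amount": 250.0, "avg_amount_90d": 50.0}
fraud_probability = pipeline.score(raw_swipe)
```

Because the caller only ever passes raw fields, whoever deploys `pipeline` does not need to rediscover which transformations the model expects.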

Tips to Improve your Model Pipeline Process

- Think in terms of DataOps - an automated, process-oriented methodology used by analytics and data teams to improve the quality and the cycle time for data analytics.

- Ensure your scoring code is modular. It will make life easier if the language you use in your research environment matches your production environment.

- Try to generate optimal scoring logic that contains only the variables and algorithmic logic that are absolutely necessary to generate a score.

- Use tools that enable you to automatically capture and bind the data preparation logic including preliminary transformations with the model score code. The data engineer or IT staff responsible for deploying the model then has a blueprint for implementation.

- Make sure your machine learning platform supports other languages along with REST API endpoints.
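As a rough illustration of modular scoring code that needs only the essential inputs and is easy to put behind a REST endpoint, here is a small Python sketch. The input names, weights, and the `handle_request` function are hypothetical, and the JSON contract shown is just one possible design, not the interface of any SAS product.

```python
import json
from math import exp

# Hypothetical model definition: only the inputs the score truly needs.
REQUIRED_INPUTS = ("log_amount", "amount_ratio")
WEIGHTS = {"log_amount": 0.4, "amount_ratio": 0.8}
INTERCEPT = -3.0


def score(features: dict) -> float:
    """Pure scoring function: no I/O, so it is easy to test and reuse."""
    z = INTERCEPT + sum(WEIGHTS[name] * features[name] for name in REQUIRED_INPUTS)
    return 1.0 / (1.0 + exp(-z))


def handle_request(body: str) -> str:
    """Takes a JSON request body and returns a JSON response body.

    Any web framework could call this from a POST handler.
    """
    payload = json.loads(body)
    missing = [name for name in REQUIRED_INPUTS if name not in payload]
    if missing:
        return json.dumps({"error": "missing inputs", "fields": missing})
    # Extra fields in the payload are ignored on purpose.
    features = {name: float(payload[name]) for name in REQUIRED_INPUTS}
    return json.dumps({"score": score(features)})
```

Keeping `score` separate from `handle_request` means the same scoring logic can be called from a batch job, a stream, or a web service without modification.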

Model Management Tools

It is important to use model management tools to validate and manage models. Many financial organizations have model validation specialists who independently validate models for compliance reasons. We are starting to see more validation specialists in other industries, especially to check models for bias. They run scoring tests to check the model inputs and outputs. The exact same model is rarely deployed more than once if you establish good model management rigor. I adhere to the change-anything-changes-everything approach, acknowledging that changing one feature or adding a new one can cause your entire model to change.
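A scoring test of the kind mentioned above can be as simple as checking that the deployed scoring code reproduces the research model on a fixed set of inputs and stays within the valid output range. The sketch below assumes two hypothetical functions, `research_score` and `production_score`, standing in for the original model and its re-implementation. The model coefficients and test rows are made up.

```python
from math import exp, isclose
from typing import Callable, Dict, List

Row = Dict[str, float]
Scorer = Callable[[Row], float]


def research_score(row: Row) -> float:
    """Hypothetical model as written in the research environment."""
    z = -1.0 + 0.5 * row["x1"] + 0.25 * row["x2"]
    return 1.0 / (1.0 + exp(-z))


def production_score(row: Row) -> float:
    """Hypothetical re-implementation used in production.

    Algebraically the same logistic function, written differently.
    """
    z = -1.0 + 0.5 * row["x1"] + 0.25 * row["x2"]
    return 1.0 - 1.0 / (1.0 + exp(z))


def find_mismatches(reference: Scorer, candidate: Scorer,
                    rows: List[Row], tol: float = 1e-9) -> List[str]:
    """Returns a description of every row where the candidate fails."""
    problems = []
    for i, row in enumerate(rows):
        expected = reference(row)
        actual = candidate(row)
        if not 0.0 <= actual <= 1.0:
            problems.append(f"row {i}: score {actual} outside [0, 1]")
        elif not isclose(expected, actual, abs_tol=tol):
            problems.append(f"row {i}: expected {expected}, got {actual}")
    return problems


test_rows = [
    {"x1": 0.0, "x2": 0.0},
    {"x1": 2.0, "x2": 4.0},
    {"x1": -3.0, "x2": 1.0},
]
problems = find_mismatches(research_score, production_score, test_rows)
```

An empty `problems` list means the two implementations agree on the test rows. In practice the rows would be a saved sample of real scoring inputs.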

Model management tools like SAS Model Manager often also have well-defined workflow and pre-built templates for proper model life cycle management to measure key milestones and foster more rigor and collaboration among different stakeholders. A good model management tool should also support version control with check-in and check-out capabilities, as well as lineage tracking to better understand where models are in use and support impact analysis. Analytics governance should require version control and employ formal approaches for documenting, testing, validating and retraining models to promote transparency by design.

Monitoring Model Performance

Aristotle was likely one of the first data scientists: he practiced empiricism, learning through observation. Like most data scientists, he saw how phenomena change over time. For a model, this means the statistical relationships it learned from historical data shift over time, a process often called concept drift. This drives the need to keep tabs on static models, and it certainly applies to machine learning models. You should monitor model decay through measures like the population stability index, ROC index and model lift. You also want to engage with the business analysts often to get feedback on how well the model is helping improve business processes. Model dashboards that include information about the number and type of models, monitoring reports, alerts and profitability summaries help bring the people, processes and technologies together as one.
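For readers who have not computed a population stability index (PSI) before, here is a small Python sketch. It uses the usual definition, the sum over bins of (actual share minus expected share) times the natural log of their ratio, where "expected" is the distribution at training time and "actual" is a recent scoring window. The bin edges and sample scores are made up, and the small floor `eps` that avoids taking the log of zero is my own assumption, not a standard value.

```python
from bisect import bisect_right
from math import log
from typing import List, Sequence


def bin_proportions(values: Sequence[float], edges: Sequence[float],
                    eps: float = 1e-6) -> List[float]:
    """Share of values in each bin defined by sorted edges.

    Empty bins are floored at eps so the log ratio stays finite.
    """
    if not values:
        raise ValueError("values must not be empty")
    counts = [0] * (len(edges) + 1)
    for v in values:
        counts[bisect_right(edges, v)] += 1
    return [max(c / len(values), eps) for c in counts]


def population_stability_index(expected: Sequence[float],
                               actual: Sequence[float],
                               edges: Sequence[float]) -> float:
    """PSI = sum over bins of (a_i - e_i) * ln(a_i / e_i)."""
    e = bin_proportions(expected, edges)
    a = bin_proportions(actual, edges)
    return sum((ai - ei) * log(ai / ei) for ei, ai in zip(e, a))


# Made-up model scores: training sample versus a recent scoring window.
edges = [0.25, 0.5, 0.75]
training_scores = [0.1, 0.2, 0.3, 0.4, 0.6, 0.7, 0.8, 0.9]
recent_scores = [0.6, 0.7, 0.8, 0.9, 0.8, 0.9, 0.3, 0.1]

psi = population_stability_index(training_scores, recent_scores, edges)
```

A commonly quoted rule of thumb treats a PSI below 0.1 as stable and above 0.25 as a significant shift, but those cutoffs are conventions and not guarantees, so it is worth agreeing on thresholds with the business.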

Advanced Modeling

Really advanced organizations are training models at the edge. Note that I mean training in real time, not offline. SAS Event Stream Processing supports many real-time models like sentiment, linear regression, and density-based clustering. I really believe one of the holy grails of machine learning is being able to orchestrate a continuous learning platform in real time, one that can adapt as the population changes. Another option you may want to consider is SAS Visual Data Mining and Machine Learning, which supports the end-to-end data mining and machine learning process with both a programming interface and a comprehensive visual interface that handle all tasks in the analytical life cycle.
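To give a feel for what training in real time means, here is a minimal Python sketch of a linear regression that updates its coefficients one event at a time using stochastic gradient descent. This is a generic textbook technique, not the SAS Event Stream Processing API or its algorithm. The class name, learning rate, and the simulated stream are all assumptions made for illustration.

```python
from typing import List, Sequence


class OnlineLinearRegression:
    """Linear regression trained one observation at a time (SGD)."""

    def __init__(self, n_features: int, learning_rate: float = 0.1) -> None:
        self.weights: List[float] = [0.0] * n_features
        self.bias = 0.0
        self.learning_rate = learning_rate

    def predict(self, x: Sequence[float]) -> float:
        return self.bias + sum(w * xi for w, xi in zip(self.weights, x))

    def update(self, x: Sequence[float], y: float) -> float:
        """Scores the event, then nudges the coefficients toward y.

        Returns the prediction error observed before the update.
        """
        error = self.predict(x) - y
        step = self.learning_rate * error
        self.bias -= step
        self.weights = [w - step * xi for w, xi in zip(self.weights, x)]
        return error


# Simulated event stream where the true relationship is y = 2x + 1.
model = OnlineLinearRegression(n_features=1, learning_rate=0.1)
for i in range(5000):
    x = (i % 10) / 10.0
    model.update([x], 2.0 * x + 1.0)
```

Because every event both gets scored and adjusts the model, the coefficients keep following the data if the underlying relationship shifts, which is the adaptive behavior described above.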

There are so many other facets associated with deploying and managing machine learning models. Please comment on the blog with feedback, and I’m always available for questions at [email protected].

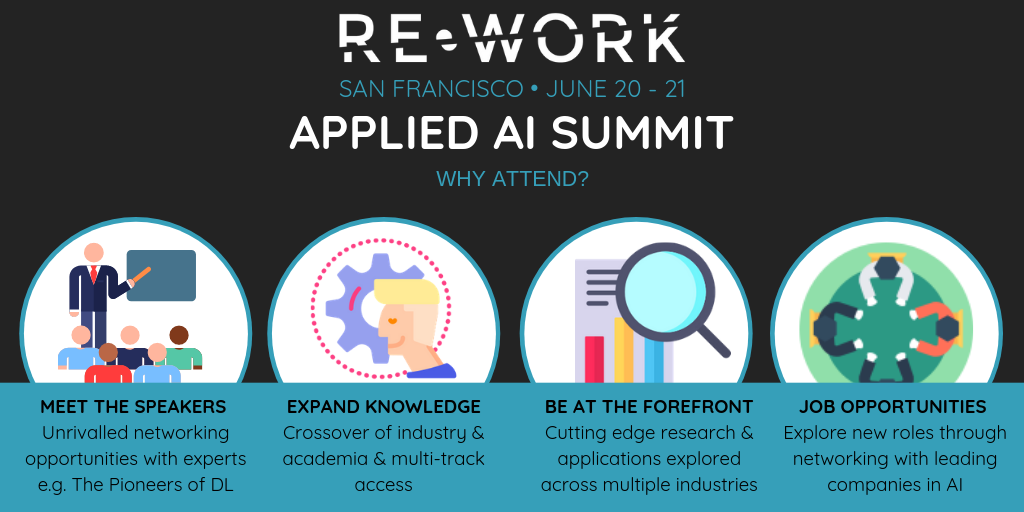
