TL;DR - Top 3 Takeaways:

- Ensure success by selecting the right use cases and establishing early wins for your organization

- Define a repeatable path to production decisioning for AI models

- Design for measurability: track analytic throughput and other KPIs used to measure the productivity and ROI of your AI initiatives

Rapid advances in computing resources and data science skills, combined with falling storage costs and massive growth in digital, image, voice, and sensor data, have driven a huge push to adopt machine learning within enterprises. While there is great potential to use these capabilities to improve and automate business decisions and customer interactions, early adopters of machine learning and artificial intelligence applications are taking on massive amounts of technical debt. It’s clear that the path to production is riddled with landmines when the leading whitepaper on the subject is titled:

“Machine Learning: The High-Interest Credit Card of Technical Debt” - D. Sculley, et al.

Many of the points the Google team identified still hold true for organizations trying to achieve enterprise-wide AI success as Google has, but we’ve found five common challenges that must be addressed first to find early success with AI:

- Scarcity of qualified business, technical, and data science resources

- Rapidly evolving and fragmented ML technology

- Difficulty migrating AI initiatives from the lab into production environments

- Data silos that prevent cross-company customer insights

- High implementation costs and time to value for AI initiatives

When we map these challenges to where they fall within the AI development lifecycle, we find something very interesting. Although most time and energy is focused on building and validating quality models, these five problems don’t occur during that central phase of the AI lifecycle; they happen at the very beginning and the very end of it.

The Beginning:

Many AI initiatives are doomed before they truly begin because they start with unrealistic or lab-focused, research-based use cases. At Quickpath, we defined a “Phase 0” step in our AI development lifecycle to brainstorm, vet, socialize, and prioritize potential use cases in order to determine their viability, complexity, and business impact before pursuing them.

There are multiple causes of early failure in the machine learning space, but across the Quickpath leadership team’s nearly 20 years of experience and more than 100 customer implementations in data and analytics, we’ve found four critical success factors for machine learning at scale. We’ve developed a scorecard based on these four dimensions of execution readiness:

1) Business readiness: Is the business bought into and ready to adopt data-driven, automated decisioning?

2) Data readiness: Is the right data available in a timely manner and of high enough quality to understand and develop analytic solutions?

3) Analytic readiness: Does the data science team have experience in the proper analytic techniques to develop a solution?

4) Technical readiness: Does the technical infrastructure support the data, analytic, and integration requirements to implement and manage machine learning solutions?

Each dimension is assessed through a series of questions scored from 1 to 5, where 1 means no capability or purely manual, ad-hoc maturity and 5 means the capability is fully mature and ready to support real-time automation and integration. Ultimately, though, the scorecard aims to quantify one simple question: “Is the pearl worth the dive?” All too often, the same intellectual curiosity that many data scientists honed during years of graduate-level academic research betrays them in a business world of measurable ROI, rapidly changing environments, and pressure to deliver value quickly.
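As a rough illustration of how the 1-5 answers might roll up into a go/no-go signal, here is a minimal Python sketch. The equal weighting of questions, the rule that the weakest dimension decides, the 3.0 threshold, and the function names are all hypothetical assumptions for illustration, not Quickpath’s actual scoring method.

```python
from statistics import fmean

# The four readiness dimensions described above.
DIMENSIONS = ("business", "data", "analytic", "technical")


def dimension_scores(answers):
    """Average each dimension's 1-5 answers (equal weighting is an assumption)."""
    scores = {}
    for dim in DIMENSIONS:
        values = answers.get(dim, [])
        if not values or any(not 1 <= v <= 5 for v in values):
            raise ValueError(f"{dim}: expected one or more scores from 1 to 5")
        scores[dim] = fmean(values)
    return scores


def is_pearl_worth_the_dive(answers, min_score=3.0):
    """Hypothetical gate: a use case proceeds only if its weakest dimension clears min_score."""
    scores = dimension_scores(answers)
    weakest = min(scores, key=scores.get)
    return scores[weakest] >= min_score, weakest, scores


# Example: strong business and analytic readiness, but weak data readiness.
use_case = {
    "business": [4, 5, 4],
    "data": [2, 3, 2],
    "analytic": [4, 4],
    "technical": [3, 3, 4],
}
proceed, weakest, scores = is_pearl_worth_the_dive(use_case)
print(proceed, weakest)  # False data
```

Gating on the weakest dimension rather than the overall average reflects the idea that a single unready area can sink an otherwise promising use case; a weighted average would be an equally reasonable design choice.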

And the End:

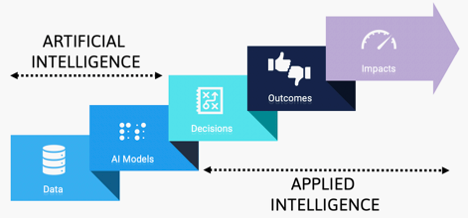

Often referred to as “the last mile” problem within the AI development lifecycle, moving models from the offline analytic environments in which they are developed and validated into production is still the great white whale that eludes many data science teams and their IT counterparts. At Quickpath, we refer to the latter half of the AI development lifecycle as “Applied Intelligence.”

It’s in the applied intelligence phase that an AI initiative achieves meaningful business impact. A quality model is merely an academic exercise until it is moved to production and integrated into a customer interaction or business decision. Once that model is operationalized and its responses are captured and measured through feedback loops and performance monitoring, we’ve seen increased revenue, decreased operating costs, and average returns on investment of 7-10X.
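A feedback loop can start as simply as joining each scored decision to the outcome observed later and watching a rolling performance metric. The minimal Python sketch below assumes binary outcomes, an in-memory store, accuracy as the metric, and a hypothetical alert threshold; `FeedbackMonitor` and its parameters are illustrative names, not part of any product API.

```python
from collections import deque


class FeedbackMonitor:
    """Joins predictions to later outcomes and tracks rolling accuracy."""

    def __init__(self, window=500, alert_below=0.8):
        self.alert_below = alert_below
        self._pending = {}                 # decision_id -> predicted label
        self._hits = deque(maxlen=window)  # most recent correct/incorrect flags

    def record_prediction(self, decision_id, predicted):
        self._pending[decision_id] = predicted

    def record_outcome(self, decision_id, actual):
        if decision_id not in self._pending:
            return  # unknown or already-closed decision; ignore it
        predicted = self._pending.pop(decision_id)
        self._hits.append(predicted == actual)

    @property
    def rolling_accuracy(self):
        if not self._hits:
            return None
        return sum(self._hits) / len(self._hits)

    def needs_attention(self):
        acc = self.rolling_accuracy
        return acc is not None and acc < self.alert_below


monitor = FeedbackMonitor(window=4, alert_below=0.75)
for i, (predicted, actual) in enumerate([(1, 1), (0, 0), (1, 0), (1, 1), (0, 1)]):
    monitor.record_prediction(f"decision-{i}", predicted)
    monitor.record_outcome(f"decision-{i}", actual)
print(monitor.rolling_accuracy, monitor.needs_attention())  # 0.5 True
```

The fixed-size window means the metric reflects recent behavior, so a model that degrades after deployment is flagged even if its lifetime accuracy still looks healthy.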

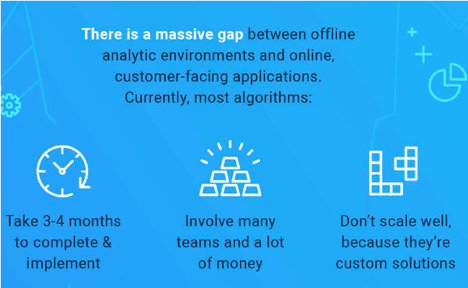

We’ve discovered a critical flaw in most companies’ production methods. Data scientists may design promising new models in two weeks, but it takes the better part of a year to actually integrate those models into business practice. The result is a bulky, expensive, and frustrating process that culminates in perpetual technical debt caused by quick fixes and inefficiencies. The gaps between the AI lab and production span people, process, technology, and data. The “handshake” that is supposed to occur between data science and IT teams more often resembles a fist fight.

One of the primary challenges facing organizations that adopt operational machine learning is the disparity between the data in the offline analytic environments used to build and train models and the online production environments intended to use them. On one end of the AI factory is a dizzying collection of platforms for wrangling data and building and validating models (Python, H2O, TensorFlow, R, scikit-learn, Keras, SAS®, OpenFace, Caffe2, Watson, and the Google, Azure, and AWS ML cloud APIs; the list goes on). On the Applied Intelligence end of the factory are the final outcomes: recommendation engines, smart IoT sensors, bots, personalized customer interactions, or any other type of automated business decision.

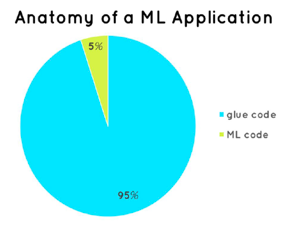

Another challenge early adopters encounter is focusing solely on algorithms and scoring while ignoring many of the supporting capabilities required to manage analytic decisioning at scale. This issue was the primary focus of the Google ML team’s whitepaper “Machine Learning: The High-Interest Credit Card of Technical Debt” (D. Sculley, et al.). The paper correctly identified that while a huge amount of attention has been directed at enabling ML algorithms, those algorithms make up only a small fraction of the code in machine learning enabled applications.

The Google team described the same ratio that Quickpath’s leadership team has observed over nearly 20 years of developing ML applications for our customers: a mature machine learning system might be at most 5% actual ML code and at least 95% glue code, which we call “Analytic Glue.”

This “Analytic Glue” addresses critical capabilities such as data pipelines, feature extraction, experimentation, simulation and validation, deployment, real-time monitoring, and learning loop automation. Quickpath’s Applied Intelligence Platform provides powerful features that enable these capabilities and help make real-time decision automation a reality. Other solutions help deploy models but disregard the critical features represented by this “Analytic Glue” that often aren’t obvious to teams working to enable their first few machine learning applications.

Recognizing that teams could not achieve streamlined production integration and deployment, Quickpath designed an alternative solution. By giving data scientists a comprehensive Applied Intelligence Platform, we could drastically reduce the time between model development and deployment from months to hours. The financial impact would be radical, followed closely by the impact on the data science team’s sanity. To achieve this, data scientists and engineers would need an integrated, collaborative platform linking the popular yet disparate modeling frameworks to the final interaction points where models are deployed. The environment would need to let data and machine learning engineers test and score models while simultaneously looping in feedback from the interaction points; it would also have to serve as a model repository and performance monitoring center and allow simple model management. Finally, all of this functionality would need to be surfaced in a modern, intuitive dashboard to help bridge the persistent communication gap between data teams and their partnering executives or clients.
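To make the repository and model-management requirements concrete, here is a minimal in-memory sketch of a model registry that keeps every version of a model, marks one version as live, and supports rollback. The `ModelRegistry` class, the `churn` model, and its `support_calls` feature are hypothetical; a real platform would add persistence, access control, and metric history.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone
from typing import Callable, Dict, List


@dataclass
class ModelVersion:
    name: str
    version: int
    predict: Callable[[dict], float]
    registered_at: datetime = field(default_factory=lambda: datetime.now(timezone.utc))


class ModelRegistry:
    """Stores every registered version and tracks which one is live per model name."""

    def __init__(self):
        self._versions: Dict[str, List[ModelVersion]] = {}
        self._live: Dict[str, int] = {}

    def register(self, name, predict):
        versions = self._versions.setdefault(name, [])
        model = ModelVersion(name=name, version=len(versions) + 1, predict=predict)
        versions.append(model)
        return model

    def promote(self, name, version):
        """Make a registered version live; promoting an older version is a rollback."""
        if not 1 <= version <= len(self._versions.get(name, [])):
            raise KeyError(f"{name} v{version} is not registered")
        self._live[name] = version

    def live_version(self, name):
        return self._versions[name][self._live[name] - 1]

    def score(self, name, features):
        return self.live_version(name).predict(features)


registry = ModelRegistry()
registry.register("churn", lambda f: 0.5)                                 # baseline
registry.register("churn", lambda f: min(1.0, f["support_calls"] / 10))  # new model
registry.promote("churn", 2)
print(registry.score("churn", {"support_calls": 3}))  # 0.3
registry.promote("churn", 1)  # roll back to the baseline
print(registry.score("churn", {"support_calls": 3}))  # 0.5
```

Keeping old versions registered, rather than overwriting them, is what makes rollback a one-line operation.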

Organizations are realizing that effective departments don’t function in chaos (organized chaos, maybe, but not plain chaos), and data science is no different. It’s no secret that most corporate AI/ML projects never live beyond the test phase, and it’s no coincidence that MLOps began gaining traction in 2020. To navigate a nascent and complex field successfully, operations are perhaps the most integral ingredient.

It’s not unusual to hear executives discuss future-proofing a company, anticipating the next unexpected turn. Some leaders even pursue AI/ML projects to this end, treating data projects like corporate storm cellars, separate from the bottom line. However, the rate of change is accelerating, and the once-distant future of data-driven enterprises is now firmly a reality for many leading brands.

Quickpath addresses these success factors by providing a highly repeatable and easily manageable bridge from the offline analytics environments used to develop and train models to the online production environments where business value is realized. Our open architecture and broad set of data and analytic adaptors enable a robust experimentation capability that lets data scientists define a crawl-walk-run approach to machine learning adoption in business decisioning.
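One common way to put a crawl-walk-run approach into practice is a champion/challenger experiment, in which a growing share of live decisions is routed to a new model while the incumbent handles the rest. The Python sketch below is an illustrative assumption, not a description of Quickpath’s platform: `assign_model` and the stage percentages in `STAGES` are hypothetical, and hashing the decision key keeps each customer on the same model.

```python
import hashlib

# Hypothetical rollout stages: share of live decisions sent to the challenger model.
STAGES = {"crawl": 0.05, "walk": 0.25, "run": 1.0}


def assign_model(decision_key, challenger_share):
    """Deterministically route a decision to the champion or challenger model."""
    if not 0.0 <= challenger_share <= 1.0:
        raise ValueError("challenger_share must be between 0 and 1")
    digest = hashlib.sha256(decision_key.encode("utf-8")).digest()
    # Keep 53 bits so the float division is exact and the bucket stays in [0, 1).
    bucket = (int.from_bytes(digest[:8], "big") >> 11) / 2**53
    return "challenger" if bucket < challenger_share else "champion"


keys = [f"customer-{i}" for i in range(10_000)]
for stage, share in STAGES.items():
    routed = sum(assign_model(k, share) == "challenger" for k in keys)
    print(stage, routed / len(keys))
```

Because assignment depends only on the key and the share, a customer routed to the challenger during the crawl stage stays with it as the share grows, which keeps each customer’s experience consistent across stages.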

About the author:

Alex Fly is the Co-founder and CEO of Quickpath, an Applied Intelligence company focused on enabling businesses to make automated, intelligent decisions throughout their organizations using machine learning and artificial intelligence. He is an experienced speaker, thought leader, and industry expert in leveraging applied intelligence to automate and optimize business processes and customer interactions. Alex is a trusted partner to Quickpath’s customers, helping them deliver real-time data and analytic products that provide massive business value across their organizations.

Alex’s career milestones include:

- Worked with 100+ Fortune 1000 companies

- Developed expert domain knowledge in Banking, Insurance, Retail, Marketing, and Sales

- Enabled tens of billions of real-time ML-driven decisions

- Presented at major data and analytic conferences

- Advisory board member to several university and bootcamp data science programs
